DeepMind's AI Outperforms IMO Gold Medalists

Google DeepMind's latest AI, AlphaGeometry2, has made waves by outperforming the average gold medalist at solving geometry problems from the International Mathematical Olympiad (IMO). AlphaGeometry2 is an improved version of AlphaGeometry, which DeepMind released in January 2024, and it reportedly solved 84% of the geometry problems set at the IMO over the last 25 years.

You might wonder why DeepMind is focusing on a high school math contest. Well, the company believes that cracking these challenging Euclidean geometry problems could be a stepping stone toward more advanced AI. Solving them requires both logical reasoning and the ability to choose among many possible solution paths, skills that could be crucial for future general-purpose AI systems.

In July 2024, DeepMind showcased a system that combined AlphaGeometry2 with AlphaProof, another AI model designed for formal math reasoning. Together, they solved four of the six problems from the 2024 IMO. DeepMind says the approach could extend beyond geometry to other areas of math and science, for example to help with complex engineering calculations.

AlphaGeometry2 is powered by a few key components, including a language model from Google's Gemini family and a "symbolic engine." The symbolic engine applies mathematical rules to derive solutions, and the Gemini model helps it arrive at feasible proofs for geometry theorems.
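To make the symbolic engine's role concrete, here is a toy rule engine in Python. It is a minimal sketch, not DeepMind's code: the fact encoding (`("para", a, b)` for "line a is parallel to line b"), the two rules, and all function names are hypothetical. It illustrates the basic idea of forward chaining, which means applying rules repeatedly until no new facts can be deduced.

```python
from itertools import product

# Hypothetical encoding: ("para", a, b) means "line a is parallel to line b".
Fact = tuple[str, str, str]


def parallel_symmetry(facts: set[Fact]) -> set[Fact]:
    """para(a, b) implies para(b, a)."""
    return {("para", b, a) for (rel, a, b) in facts if rel == "para"}


def parallel_transitivity(facts: set[Fact]) -> set[Fact]:
    """para(a, b) and para(b, c) imply para(a, c), for distinct lines a and c."""
    paras = [(a, b) for (rel, a, b) in facts if rel == "para"]
    return {
        ("para", a, c)
        for (a, b), (b2, c) in product(paras, repeat=2)
        if b == b2 and a != c
    }


RULES = [parallel_symmetry, parallel_transitivity]


def deductive_closure(premises: set[Fact]) -> set[Fact]:
    """Apply every rule repeatedly until no new fact appears (a fixpoint)."""
    facts = set(premises)
    while True:
        new: set[Fact] = set()
        for rule in RULES:
            new |= rule(facts)
        new -= facts
        if not new:
            return facts
        facts |= new


closure = deductive_closure({("para", "AB", "CD"), ("para", "CD", "EF")})
print(("para", "AB", "EF") in closure)  # True
```

A real geometry engine would need many more rules (about angles, ratios, circles, and so on) and far more efficient bookkeeping; the point here is only the deduce-until-nothing-changes loop.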

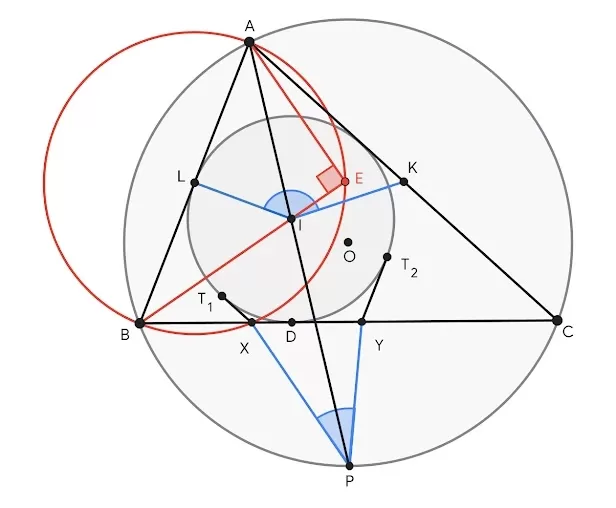

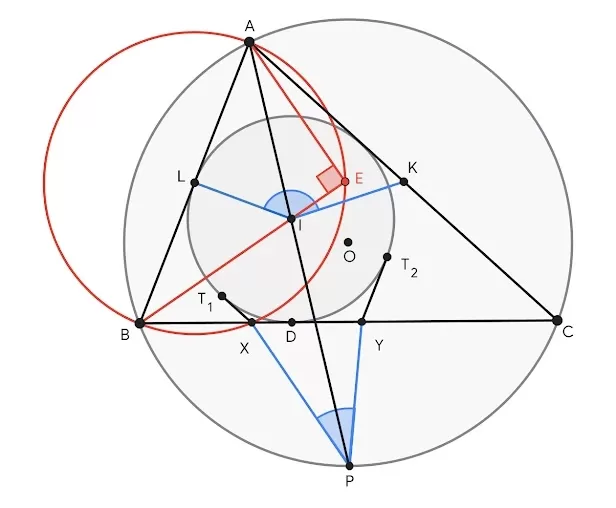

A typical geometry problem diagram in an IMO exam. Image Credits: Google

In the IMO, geometry problems often require adding "constructs" like points, lines, or circles to diagrams before solving them. AlphaGeometry2's Gemini model predicts which constructs might be helpful, guiding the symbolic engine to make deductions.

Here's how it works: The Gemini model suggests steps and constructions in a formal mathematical language, which the engine then checks for logical consistency. AlphaGeometry2 uses a search algorithm to explore multiple solution paths simultaneously and stores potentially useful findings in a shared knowledge base.

A problem is considered "solved" when AlphaGeometry2 combines the Gemini model's suggestions with the symbolic engine's known principles to form a complete proof.
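The propose-and-check loop described above can be sketched as a small search. Everything here is a hypothetical stand-in: `propose_constructions` returns a fixed, ranked list where AlphaGeometry2 would query its Gemini model, the toy engine only knows two rules about parallel lines, and `shared_kb` is a simple cache of deduction results that every branch of the search reuses, a loose analogue of the shared knowledge base. The real system's search algorithm is more elaborate than this breadth-first beam.

```python
from itertools import product

# Hypothetical encoding: ("para", a, b) means "line a is parallel to line b".


def deductive_closure(premises: frozenset) -> frozenset:
    """Toy symbolic engine: close 'para' facts under symmetry and transitivity."""
    facts = set(premises)
    changed = True
    while changed:
        paras = [(a, b) for (rel, a, b) in facts if rel == "para"]
        new = {("para", b, a) for a, b in paras}
        new |= {("para", a, c) for (a, b), (b2, c) in product(paras, repeat=2)
                if b == b2 and a != c}
        changed = not new <= facts
        facts |= new
    return frozenset(facts)


def propose_constructions(facts: frozenset) -> list:
    """Hypothetical stand-in for the language model: a ranked list of
    (construction name, facts the construction adds). A real model would
    condition on `facts`; this toy ignores them."""
    return [
        ("circle through A, B, C", frozenset()),  # plausible but useless here
        ("line EF through E parallel to CD", frozenset({("para", "EF", "CD")})),
    ]


def solve(premises, goal, max_depth=2, beam_width=2):
    """Breadth-first search over sequences of constructions.
    Returns the constructions used, or None if the goal is never deduced."""
    shared_kb: dict = {}  # deduction results computed once, reused by every branch
    frontier = [(frozenset(premises), ())]
    for _ in range(max_depth + 1):
        next_frontier = []
        for facts, used in frontier:
            if facts not in shared_kb:
                shared_kb[facts] = deductive_closure(facts)
            closed = shared_kb[facts]
            if goal in closed:  # the engine has derived the goal: a complete proof
                return list(used)
            for name, added in propose_constructions(closed)[:beam_width]:
                if name not in used:
                    next_frontier.append((facts | added, used + (name,)))
        frontier = next_frontier
    return None


print(solve({("para", "AB", "CD")}, ("para", "AB", "EF")))
# ['line EF through E parallel to CD']
```

In this example the goal `("para", "AB", "EF")` cannot be deduced from the premise `("para", "AB", "CD")` alone, so the search only succeeds once the suggested auxiliary line is added and the engine re-runs its deductions.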

Due to the scarcity of usable geometry training data, DeepMind created synthetic data to train AlphaGeometry2's language model, generating over 300 million theorems and proofs of varying complexity.
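The article does not say how the synthetic theorems were generated. One plausible recipe, assumed here (it resembles the approach described for the original AlphaGeometry), is to sample random premises, run a symbolic engine over them, and record each derived fact together with the premises its derivation used. The sketch below is a toy version of that idea limited to parallel lines; the line names and all functions are hypothetical.

```python
import random
from itertools import product

LINES = ["l1", "l2", "l3", "l4", "l5"]  # hypothetical line names


def sample_premises(rng: random.Random, k: int = 3) -> set:
    """Pick k distinct random premises of the form 'line a is parallel to line b'."""
    pairs = [(a, b) for a in LINES for b in LINES if a < b]
    return set(rng.sample(pairs, k))


def closure_with_provenance(premises: set) -> dict:
    """Forward-chain parallelism facts, mapping each fact to the premises
    its (first found) derivation depends on."""
    deps = {p: frozenset({p}) for p in premises}
    changed = True
    while changed:
        changed = False
        for (a, b), used in list(deps.items()):  # symmetry
            if (b, a) not in deps:
                deps[(b, a)] = used
                changed = True
        for ((a, b), d1), ((b2, c), d2) in product(list(deps.items()), repeat=2):
            if b == b2 and a != c and (a, c) not in deps:  # transitivity
                deps[(a, c)] = d1 | d2
                changed = True
    return deps


def generate_examples(n_samples: int, seed: int = 0) -> list:
    """Return (sorted premises, theorem) pairs for non-trivial derived facts."""
    rng = random.Random(seed)
    examples = []
    for _ in range(n_samples):
        premises = sample_premises(rng)
        for fact, used in closure_with_provenance(premises).items():
            # Skip the premises themselves and facts that need only one premise.
            if fact not in premises and len(used) >= 2:
                examples.append((sorted(used), fact))
    return examples


for premises, theorem in generate_examples(3)[:5]:
    print(premises, "=>", theorem)
```

Each example pairs the premises a derivation relied on with the theorem they imply. A real pipeline would also keep the proof steps and cover far richer geometry, but the sample-deduce-record shape is the same.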

The DeepMind team selected 45 geometry problems from IMO competitions held between 2000 and 2024 and translated them into a set of 50 problems (for technical reasons, some had to be split in two). AlphaGeometry2 solved 42 of these, surpassing the average gold medalist score of 40.9.
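A quick check confirms that the headline 84% figure is this 42-out-of-50 result. The snippet assumes, as the text implies, that the 40.9 average counts problems from the same 50-problem set.

```python
solved, total = 42, 50
avg_gold = 40.9  # reported average gold-medalist score (assumed: same 50 problems)

solve_rate = solved / total
margin = solved - avg_gold

print(f"Solve rate: {solve_rate:.0%}")                               # Solve rate: 84%
print(f"Margin over average gold medalist: {margin:.1f} problems")  # ... 1.1 problems
```

In other words, AlphaGeometry2's lead over the average gold medalist is about one problem.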

However, AlphaGeometry2 has its limits. For technical reasons, it cannot solve problems involving a variable number of points, nonlinear equations, or inequalities. And while it is not the first AI to reach gold-medal-level performance in geometry, it is the first to do so on a problem set of this size.

When faced with a tougher set of 29 IMO-nominated problems that haven't yet appeared in competitions, AlphaGeometry2 could only solve 20.
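For comparison, the same arithmetic on the harder set shows the solve rate falling from 84% to roughly 69%, a drop of about 15 percentage points:

```python
benchmark_rate = 42 / 50  # 84% on the 50-problem IMO benchmark
hard_solved, hard_total = 20, 29
hard_rate = hard_solved / hard_total

print(f"Harder set: {hard_rate:.1%}")                            # Harder set: 69.0%
print(f"Drop: {(benchmark_rate - hard_rate) * 100:.1f} points")  # Drop: 15.0 points
```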

The study's results are likely to spark further debate about the best approach to building AI systems. Should we focus on symbol manipulation, where AI uses rules to manipulate symbols representing knowledge, or on neural networks, which are loosely modeled on the structure of the human brain and learn from data?

AlphaGeometry2 takes a hybrid approach, combining the neural network architecture of the Gemini model with the rules-based symbolic engine.

Supporters of neural networks argue that intelligent behavior can emerge from vast amounts of data and computing power. Proponents of symbolic AI, in contrast, believe symbolic approaches are better suited to encoding knowledge, reasoning through complex scenarios, and explaining solutions.

Vince Conitzer, a Carnegie Mellon University computer science professor specializing in AI, commented on the contrast between the impressive progress on benchmarks like the IMO and the ongoing struggles of language models with simple commonsense problems. He emphasized the need to better understand these systems and the risks they pose.

AlphaGeometry2 suggests that combining symbol manipulation and neural networks might be a promising way forward in the quest for generalizable AI. Interestingly, the DeepMind team found that AlphaGeometry2's language model could generate partial solutions to problems without the symbolic engine's help, hinting at the potential for language models to become self-sufficient in the future.

However, the team noted that until language model speed improves and hallucinations are resolved, tools like symbolic engines will remain essential for math applications.


Comments (31)


KevinBrown

September 3, 2025 at 8:30:33 AM EDT

Impressive but a bit scary... If an AI can beat gold medalists at the Olympiad, what fields are left where humans are still the best? 😅 I hope we're not all going to become obsolete!


GregoryWalker

August 20, 2025 at 1:01:20 PM EDT

This AI beating IMO champs is wild! 🧠 Geometry’s tough, but AlphaGeometry2’s out here crushing it. Makes me wonder if it’ll start tutoring kids soon! 😄


AnthonyMoore

August 19, 2025 at 3:01:23 PM EDT

Incredible, AlphaGeometry2 outperforms IMO gold medalists in geometry! 😲 It shows how fast AI is advancing, but I wonder whether it could one day solve more... human problems, like handling my taxes!


GaryThomas

August 13, 2025 at 9:00:59 PM EDT

This AI beating IMO gold medalists is wild! 🤯 Geometry’s tough, but AlphaGeometry2’s out here making it look easy. Wonder how far it’ll go in other math fields?


AlbertSmith

August 9, 2025 at 5:00:59 PM EDT

Wow, AlphaGeometry2 is killing it at IMO geometry problems! Beating gold medalists is wild—makes me wonder if AI will soon design math contests instead of just solving them. 😮


JackCarter

July 27, 2025 at 10:13:31 PM EDT

This AI beating IMO champs is wild! 🤯 Makes me wonder if it could tutor me in math or just take over the world one proof at a time.
