DataGemma Tackles AI Hallucinations with Real-World Data

Large language models (LLMs) are at the heart of today's AI breakthroughs, capable of sifting through massive text datasets to produce summaries, spark creative ideas, and even write code. Yet for all their prowess, these models sometimes present information that's just plain wrong, a problem known as "hallucination." It's one of the biggest hurdles in generative AI.

We're excited to share cutting-edge research that tackles this issue head-on, aiming to curb hallucinations by grounding LLMs in real-world statistical data. We're also thrilled to introduce DataGemma, the first open models designed to connect LLMs with the extensive real-world data in Google's Data Commons.

Data Commons: A Treasure Trove of Trustworthy Data

Data Commons is like a giant, ever-growing library of public data, boasting over 240 billion data points on everything from health to economics. It draws this data from trusted sources such as the UN, the WHO, the CDC, and census bureaus. By unifying these datasets behind a single set of tools and AI models, Data Commons helps policymakers, researchers, and organizations get the accurate insights they need.
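To make the idea of combining many sources into one store concrete, here is a minimal Python sketch of one way records from differently shaped sources could be normalized into a single store keyed by place, statistical variable, and date, while keeping track of where each value came from. It is an illustration under stated assumptions, not Data Commons' actual schema or pipeline: the `Observation` class, `FIELD_MAPS`, the source names, and the sample rows are all hypothetical, and the numbers are made up.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Observation:
    place: str     # place identifier (hypothetical, e.g. "CountryA")
    variable: str  # statistical variable name (hypothetical)
    date: str      # observation date, e.g. "2020"
    value: float
    source: str    # provenance, kept so an answer can say where a number came from


Key = Tuple[str, str, str]  # (place, variable, date)

# Hypothetical sources, each with its own column names, mapped onto one schema.
FIELD_MAPS: Dict[str, Dict[str, str]] = {
    "source_a": {"place": "country", "variable": "indicator", "date": "year", "value": "val"},
    "source_b": {"place": "geo", "variable": "stat", "date": "period", "value": "amount"},
}


def unify(raw: Dict[str, List[dict]]) -> Dict[Key, Observation]:
    """Normalize rows from several sources into one store keyed by (place, variable, date)."""
    store: Dict[Key, Observation] = {}
    for source, rows in raw.items():
        fields = FIELD_MAPS[source]
        for row in rows:
            obs = Observation(
                place=row[fields["place"]],
                variable=row[fields["variable"]],
                date=str(row[fields["date"]]),
                value=float(row[fields["value"]]),
                source=source,
            )
            # First source wins on duplicate keys; a real pipeline needs an
            # explicit policy for reconciling conflicting values.
            store.setdefault((obs.place, obs.variable, obs.date), obs)
    return store


# Made-up sample rows in two different source layouts.
raw = {
    "source_a": [{"country": "CountryA", "indicator": "ElectricityAccessPct", "year": 2010, "val": 40}],
    "source_b": [{"geo": "CountryA", "stat": "ElectricityAccessPct", "period": "2020", "amount": "65"}],
}
store = unify(raw)
print(store[("CountryA", "ElectricityAccessPct", "2020")])
```

Keeping `source` on every observation is what later allows a grounded answer to point back to where each figure came from.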

Imagine a vast database where you can ask questions in plain English, like which African countries have seen the biggest jump in electricity access, or how income relates to diabetes across US counties. That's Data Commons for you.
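Once the data sits in one place, the two example questions above boil down to simple computations. The Python sketch below ranks places by the change in electricity access between two years and computes a Pearson correlation between income and diabetes prevalence. It does not show how Data Commons interprets a plain-English question; the place names, figures, and helper names (`biggest_jumps`, `pearson`) are hypothetical placeholders, not real Data Commons values or APIs.

```python
from math import sqrt
from typing import Dict, List, Sequence, Tuple

# Made-up illustrative numbers, NOT real Data Commons values.
# Share of population with electricity access (%), by place and year.
electricity_access: Dict[str, Dict[int, float]] = {
    "CountryA": {2010: 40.0, 2020: 65.0},
    "CountryB": {2010: 55.0, 2020: 70.0},
    "CountryC": {2010: 20.0, 2020: 52.0},
}


def biggest_jumps(series: Dict[str, Dict[int, float]], start: int, end: int) -> List[Tuple[str, float]]:
    """Rank places by change in value between two years, largest increase first."""
    changes = {place: years[end] - years[start] for place, years in series.items()}
    return sorted(changes.items(), key=lambda kv: kv[1], reverse=True)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sd_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    sd_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (sd_x * sd_y)


# Made-up county-level figures: median household income (USD) and diabetes prevalence (%).
income = [42_000, 55_000, 61_000, 78_000, 90_000]
diabetes = [13.1, 11.4, 10.9, 9.2, 8.0]

print(biggest_jumps(electricity_access, 2010, 2020))
print(round(pearson(income, diabetes), 2))
```

The strongly negative correlation here only reflects the invented numbers; it says nothing about real counties, and a correlation alone does not establish cause.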

How Data Commons Helps Fight Hallucination

As more folks turn to generative AI, we're working to make these experiences more grounded by weaving Data Commons into Gemma, our family of lightweight, top-notch open models. These DataGemma models are now available for researchers and developers to dive into.

DataGemma boosts Gemma's capabilities by tapping into Data Commons' knowledge, using two cool methods to improve the accuracy and reasoning of LLMs:

RIG (Retrieval-Interleaved Generation) amps up our Gemma 2 model by proactively fact-checking against Data Commons. As DataGemma generates a response, it identifies the statistics it's about to state and retrieves the corresponding figures from Data Commons, so the numbers in its answer come from a trusted source. RIG itself isn't a new idea, but its specific application within DataGemma is.

Example query: "Has the use of renewables increased in the world?" Using the RIG methodology, DataGemma draws on Data Commons (DC) for authoritative data.
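A minimal Python sketch of the interleaving idea, under loudly stated assumptions: it supposes the model marks each statistic it generates with an inline query, and then swaps the model's own guess for whatever value a lookup returns. The `[DC(...) --> ...]` marker syntax, `lookup_stat`, and `TOY_ANSWERS` are hypothetical; DataGemma's actual output format and its calls to Data Commons may differ, and the numbers are made up.

```python
import re
from typing import Optional

# Hypothetical marker syntax: assume the fine-tuned model writes
# [DC("natural-language query") --> "its own guess"] wherever it states a statistic.
MARKER = re.compile(r'\[DC\("(?P<query>[^"]+)"\)\s*-->\s*"(?P<guess>[^"]*)"\]')

# Made-up answers standing in for Data Commons lookups; NOT real statistics.
TOY_ANSWERS = {
    "What was the renewable share of electricity in CountryA in 2020?": "42%",
}


def lookup_stat(query: str) -> Optional[str]:
    """Hypothetical stand-in for querying Data Commons; None means no data found."""
    return TOY_ANSWERS.get(query)


def interleave(draft: str) -> str:
    """Swap each model-generated statistic for the retrieved one when retrieval succeeds."""
    def substitute(match: re.Match) -> str:
        retrieved = lookup_stat(match.group("query"))
        if retrieved is None:
            return match.group("guess")  # fall back to the model's own text
        return f"{retrieved} [Data Commons; model guessed {match.group('guess')}]"
    return MARKER.sub(substitute, draft)


draft = ('In 2020, renewables supplied '
         '[DC("What was the renewable share of electricity in CountryA in 2020?") --> "35%"] '
         'of electricity in CountryA.')
print(interleave(draft))
```

Keeping the model's guess next to the retrieved figure, rather than silently overwriting it, makes any disagreement between the two visible to the reader.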

RAG (Retrieval-Augmented Generation) lets language models pull in relevant information beyond what they've been trained on, making their answers richer and more accurate. With DataGemma, relevant data is retrieved from Data Commons before the model starts crafting its response, and Gemini 1.5 Pro's long context window makes room for that retrieved data, cutting down on hallucinations.

Example query: "Has the use of renewables increased in the world?" Using the RAG methodology, DataGemma's response shows stronger reasoning and includes footnotes.
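Unlike RIG, RAG does its retrieval up front. The Python sketch below assumes a hypothetical `generate_queries` step that turns the user's question into data queries, fetches matching tables from a made-up `TOY_STORE`, and places them ahead of the question as numbered sources the model can cite as footnotes. A long-context model such as Gemini 1.5 Pro is what would make room for many such tables; here `llm` is any text-in, text-out callable, and none of these names are a real DataGemma or Data Commons API.

```python
from typing import Callable, Dict, List, Tuple

Table = List[Tuple[str, str, str]]  # rows of (place, year, value)

# Made-up tables standing in for Data Commons results; NOT real statistics.
TOY_STORE: Dict[str, Table] = {
    "renewable share of electricity in CountryA": [("CountryA", "2010", "18%"), ("CountryA", "2020", "42%")],
    "renewable share of electricity in CountryB": [("CountryB", "2010", "25%"), ("CountryB", "2020", "31%")],
}


def generate_queries(question: str) -> List[str]:
    """Hypothetical stand-in for the step that turns a user question into data queries."""
    if "renewable" in question.lower():
        return list(TOY_STORE)
    return []


def build_prompt(question: str) -> str:
    """Fetch tables first, then place them ahead of the question as numbered sources."""
    blocks = []
    for i, query in enumerate(generate_queries(question), start=1):
        rows = TOY_STORE.get(query, [])
        body = "\n".join(f"{place} | {year} | {value}" for place, year, value in rows)
        blocks.append(f"[{i}] {query}\nplace | year | value\n{body}")
    context = "\n\n".join(blocks) if blocks else "(no tables found)"
    return (
        "Answer using only the tables below and cite them as [n].\n\n"
        f"{context}\n\nQuestion: {question}"
    )


def answer(question: str, llm: Callable[[str], str]) -> str:
    """Retrieval happens before generation; the model only sees the augmented prompt."""
    return llm(build_prompt(question))


print(build_prompt("Has the use of renewables increased in the world?"))
```

Numbering each table in the prompt is one simple way to let the model attach footnotes that point back to the data it used.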

Promising Results and What's Next

Our early tests with RIG and RAG are looking good. We're seeing better accuracy in our models when dealing with numbers, which means fewer hallucinations for folks using these models for research, decision-making, or just to satisfy their curiosity. You can check out these results in our research paper.

Illustration of a RAG query and response. Supporting ground truth statistics are referenced as tables served from Data Commons. *Partial response shown for brevity.

We're not stopping here. We're all in on refining these methods, scaling up our efforts, and putting them through the wringer with more tests. Eventually, we'll roll out these improvements to both Gemma and Gemini models, starting with a limited-access phase.

By sharing our research and making this new Gemma model variant open, we hope to spread the use of these Data Commons-based techniques far and wide. Making LLMs more reliable and trustworthy is crucial for turning them into essential tools for everyone, helping to build a future where AI gives people accurate info, supports informed choices, and deepens our understanding of the world.

Researchers and developers can jump right in with DataGemma using our quickstart notebooks for both RIG and RAG. To dive deeper into how Data Commons and Gemma work together, check out our Research post.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

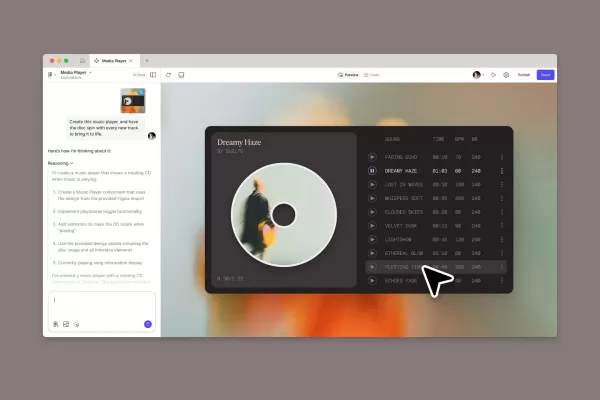

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (39)

0/200

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (39)

0/200

WillMitchell

October 4, 2025 at 2:30:40 PM EDT

I wonder whether DataGemma will really be able to solve the problem of AI hallucinations. It looks promising, but we've already seen plenty of 'miracle' solutions that end up not delivering. Hopefully this time will be different, because the errors in current models can be pretty serious 😅

BillyAdams

August 25, 2025 at 5:47:02 AM EDT

This article on DataGemma is super intriguing! It's wild how LLMs can churn out so much but still trip up on facts sometimes. 😅 Makes me wonder if grounding them in real-world data could finally make AI as reliable as we hope!

StephenScott

August 8, 2025 at 5:00:59 AM EDT

This article on DataGemma is super intriguing! I love how it dives into fixing AI hallucinations with real-world data. Makes me wonder if we’ll finally get models that don’t spit out random nonsense. 😄 Anyone else excited about this?

ArthurYoung

July 29, 2025 at 8:25:16 AM EDT

This article on DataGemma is super intriguing! It's wild how LLMs can churn out so much but still trip over facts. Excited to see how real-world data could make AI less of a fibber! 😄

RalphJohnson

April 21, 2025 at 12:26:32 AM EDT

DataGemma is a real help! It keeps AI hallucinations in check with real-world data, so it's like having a fact-checker built into the AI. It would be perfect if processing were a little faster, but it's still a great tool! 👍

WillieAnderson

April 17, 2025 at 5:10:42 PM EDT

DataGemma is really helpful! It reduces AI hallucinations with real-world data, so it's like the AI has its own fact-checker. I wish processing were a bit faster, but it's still a great tool! 👍
