AI Transforms 2D Images into Stunning 3D Photos - The Ultimate Guide

Artificial intelligence can now convert a static 2D photograph into a 3D photo whose perspective shifts as the viewpoint moves. The conversion estimates depth for every pixel of a single image and synthesizes the content that a new viewpoint reveals. This guide covers how the technique works, how to run it in practice, and which creative uses it opens up.

Key Points

Mastering depth-aware image transformations through advanced inpainting techniques.

Harnessing AI algorithms to generate accurate depth maps from single images.

Understanding visual gap-filling mechanisms for realistic 3D conversions.

Implementing ready-to-use, pre-trained AI models for 3D photo generation.

Evaluating current capabilities and boundaries of AI-powered 3D imaging.

Discovering diverse cinematic effects achievable through AI rendering.

Practical workflow for converting personal photos into dimensional artworks.

Unveiling the Magic of AI 3D Photography

What is AI-Powered 3D Photography?

AI-powered 3D photography gives a conventional flat photograph spatial depth through algorithmic processing. A photograph records only brightness and color for each pixel; it stores no distance information, so the depth that human vision relies on has to be estimated from the image itself.

The conversion relies on three components:

- Depth Prediction: Neural networks analyze visual patterns to estimate object distances, creating pixel-level depth maps.

- Visual Reconstruction: Context-aware algorithms synthesize the regions hidden behind foreground objects, so there is something to show when the viewpoint moves.

- Dynamic Rendering: Systems generate multiple viewing angles simulating three-dimensional perspective shifts.

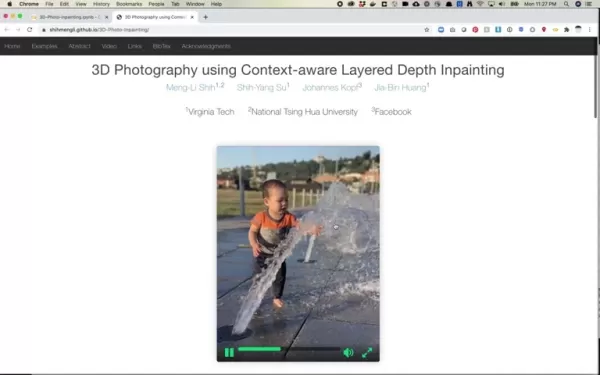

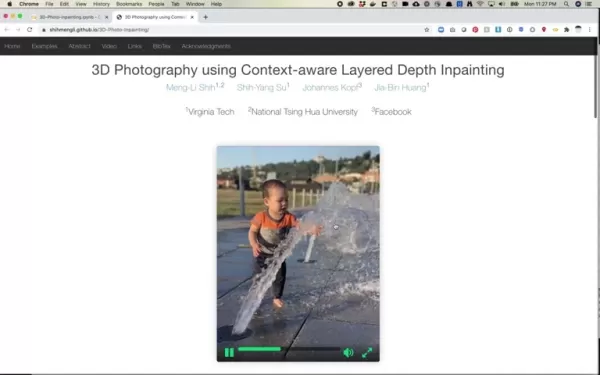

The Core Technique: Context-Aware Layered Depth Inpainting

Modern 3D photo conversion chains several stages into one processing pipeline:

- Scene analysis through convolutional neural networks

- Depth estimation via trained prediction models

- Segmentation of the image into depth layers

- Viewpoint simulation through geometric transformation

- Visual gap completion using generative inpainting

This sophisticated workflow enables various output formats including depth visualizations, animated perspective shifts, and interactive 3D views that bring static images to life.
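The last two stages of the pipeline above, viewpoint simulation and gap completion, can be illustrated on a single image row. This is a minimal sketch under stated assumptions, not the technique's actual implementation: `shift_view` and `fill_holes` are hypothetical helper names, depth is assumed to be normalized so that 1.0 is nearest to the camera, and the learned generative inpainting step is replaced by a naive copy from the farther neighbouring pixel.

```python
def shift_view(colors, depths, max_shift):
    """Forward-warp one image row: nearer pixels move further (parallax)."""
    width = len(colors)
    warped = [None] * width          # None marks a pixel nothing landed on
    warped_depth = [-1.0] * width    # depth of whatever currently occupies each slot
    for x in range(width):
        target = x + round(depths[x] * max_shift)
        # Keep the nearest surface when two pixels land on the same slot.
        if 0 <= target < width and depths[x] > warped_depth[target]:
            warped[target] = colors[x]
            warped_depth[target] = depths[x]
    return warped, warped_depth


def fill_holes(warped, warped_depth):
    """Naive stand-in for inpainting: copy the farther of the two nearest known pixels."""
    width = len(warped)
    filled = list(warped)
    for x in range(width):
        if warped[x] is not None:
            continue
        left = next((i for i in range(x - 1, -1, -1) if warped[i] is not None), None)
        right = next((i for i in range(x + 1, width) if warped[i] is not None), None)
        candidates = [i for i in (left, right) if i is not None]
        if candidates:
            # Gaps opened by a viewpoint shift reveal background, so prefer it.
            source = min(candidates, key=lambda i: warped_depth[i])
            filled[x] = warped[source]
    return filled


row = ["bg0", "bg1", "fg", "fg", "bg4", "bg5"]
depth = [0.0, 0.0, 1.0, 1.0, 0.0, 0.0]
warped, warped_depth = shift_view(row, depth, max_shift=2)
print(warped)                            # gaps appear where the foreground used to be
print(fill_holes(warped, warped_depth))  # gaps filled from the background side
```

The gaps in the warped row are exactly the region the inpainting model has to invent, which is why larger viewpoint shifts are harder to render convincingly.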

Understanding Depth Mapping and its Importance

Depth mapping serves as the foundation for believable 3D conversions by establishing spatial relationships between image elements. Advanced techniques include:

- Monocular depth estimation: Single-image analysis using trained neural networks

- Geometric reconstruction: Interpretation of perspective lines and vanishing points

- Texture gradient analysis: Using the way surface texture appears finer and denser with distance as a depth cue
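Whatever technique produces it, a depth map is usually rescaled before it is viewed or passed to later stages. The sketch below assumes the raw prediction is a nested list of relative depth values with no fixed unit; `normalize_depth` and `to_grayscale` are illustrative names, not part of any particular model's API.

```python
def normalize_depth(raw):
    """Rescale a 2D depth map to the range [0, 1]."""
    values = [v for row in raw for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        # A perfectly flat map carries no depth ordering.
        return [[0.0 for _ in row] for row in raw]
    return [[(v - lo) / (hi - lo) for v in row] for row in raw]


def to_grayscale(depth01):
    """Map normalized depth to 8-bit gray levels for a depth visualization."""
    return [[round(v * 255) for v in row] for row in depth01]


raw_prediction = [[2.0, 4.0], [6.0, 10.0]]
print(to_grayscale(normalize_depth(raw_prediction)))
```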

Practical Implementation Guide

Setting Up Your Development Environment

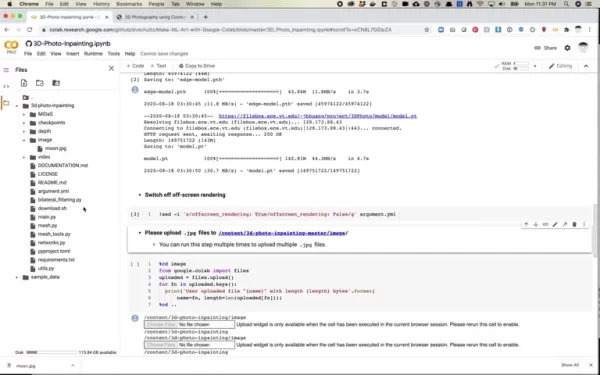

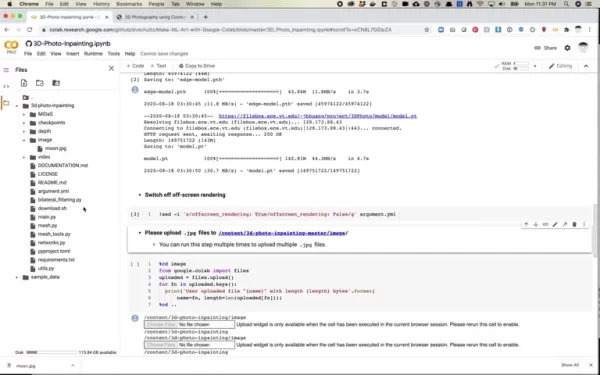

Google Colab provides an accessible platform for experimenting with 3D photo conversion. Essential configuration steps include:

- Activating GPU acceleration in runtime settings

- Installing core visualization libraries

- Configuring Python dependencies
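Before running anything heavy, it can help to confirm that the runtime looks the way these steps expect. The check below is a rough sketch using only the Python standard library: the presence of `nvidia-smi` on the path is treated as a hint that a GPU runtime is active, and the package names in `wanted` are placeholders, since the actual dependency list depends on the script you download.

```python
import importlib.util
import shutil


def gpu_driver_visible():
    """Return True if the nvidia-smi tool is on PATH (a hint, not a guarantee)."""
    return shutil.which("nvidia-smi") is not None


def missing_packages(names):
    """Return the top-level package names that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


# Placeholder names: replace with the packages your conversion script requires.
wanted = ["numpy", "torch", "cv2"]
print("GPU driver visible:", gpu_driver_visible())
print("Missing packages:", missing_packages(wanted))
```

If the GPU check fails in Colab, the runtime type is the first thing to revisit; anything reported as missing still needs to be installed.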

Downloading the Script and Pre-trained Models

The implementation requires specific AI models that have been pre-trained on extensive image datasets. Key components include:

- 3D reconstruction neural networks

- Depth prediction algorithms

- Image inpainting architectures

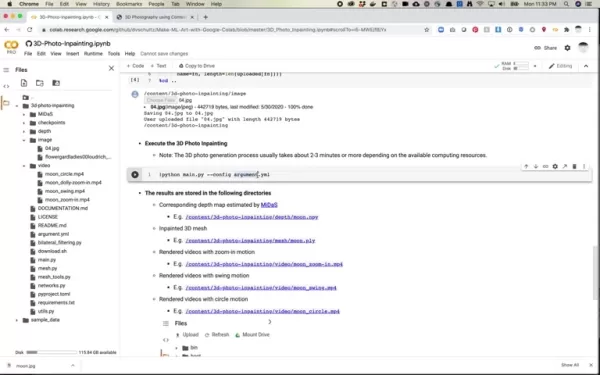

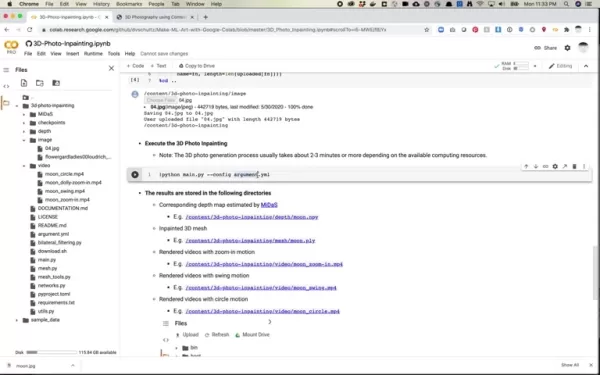

Uploading and Executing the 3D Transformation

The conversion process involves:

- Selecting optimal source images (JPEG format recommended)

- Uploading to the processing environment

- Executing the conversion pipeline

- Reviewing and refining outputs
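Since JPEG is the recommended input, a quick sanity check on uploaded files can catch a mislabeled image before the pipeline runs. This sketch relies on the fact that JPEG files begin with the bytes `FF D8 FF`; the helper names are hypothetical.

```python
JPEG_SIGNATURE = b"\xff\xd8\xff"


def looks_like_jpeg(data):
    """Check the leading bytes rather than trusting the file extension."""
    return data[:3] == JPEG_SIGNATURE


def read_if_jpeg(path):
    """Read a file and raise if it does not start with the JPEG signature."""
    with open(path, "rb") as handle:
        data = handle.read()
    if not looks_like_jpeg(data):
        raise ValueError(f"{path} does not start with the JPEG signature")
    return data


print(looks_like_jpeg(b"\xff\xd8\xff\xe0\x00\x10JFIF"))  # JPEG-style header
print(looks_like_jpeg(b"\x89PNG\r\n\x1a\n"))             # PNG header
```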

Creating Your AI 3D Photo

Step-by-Step Conversion Process

- Image selection: Choose photos with clear subjects and good lighting

- Environment setup: Configure the Colab notebook with the necessary dependencies

- Model deployment: Load and initialize the AI processing pipeline

- Conversion execution: Run the transformation algorithms

- Output generation: Review and export your 3D photo

Technical Considerations

Performance Factors

- GPU acceleration significantly reduces processing time

- Image resolution affects both quality and computation duration

- Complex scenes may require additional processing iterations
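One practical way to trade quality against computation time is to cap the longest edge of the source image before conversion. The helper below is a sketch; `fit_longest_edge` is an illustrative name and the 960-pixel cap is an arbitrary example value, not a setting taken from any specific tool.

```python
def fit_longest_edge(width, height, max_edge):
    """Return new (width, height) with the longest edge capped, keeping aspect ratio."""
    longest = max(width, height)
    if longest <= max_edge:
        return width, height  # never upscale
    scale = max_edge / longest
    return max(1, round(width * scale)), max(1, round(height * scale))


new_w, new_h = fit_longest_edge(4000, 3000, max_edge=960)
print(new_w, new_h)
# Pixel count, and roughly the per-pixel work, shrinks with the square of the scale.
print((4000 * 3000) / (new_w * new_h))
```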

Output Quality Optimization

- Use high-quality source images with good contrast

- Ensure proper lighting in original photographs

- Select images with clearly defined foreground elements

- Avoid excessive noise or compression artifacts

Advantages of AI 3D Photo Conversion

- Revitalizes historical or archival photographs

- Creates engaging content for digital platforms

- Enhances visual storytelling capabilities

- Provides a cost-effective alternative to stereoscopic photography

Current Limitations

- Depth estimation challenges with reflective/specular surfaces

- Potential artifacts in complex occlusion scenarios

- Computationally intensive processing requirements

- Limited viewpoint adjustment range
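The limited viewpoint range follows from simple geometry. Under a pinhole camera model with a sideways camera shift, a point at depth Z moves by f * b / Z pixels, so the gap that opens between a foreground object and the background behind it grows linearly with the shift b. The sketch below uses that relation; the function name and the numbers are illustrative assumptions, not measurements from any tool.

```python
def disocclusion_width(focal_px, shift, z_near, z_far):
    """Width in pixels of the gap revealed behind a foreground edge.

    focal_px: focal length in pixels; shift, z_near, z_far share one length unit.
    """
    return focal_px * shift * (1.0 / z_near - 1.0 / z_far)


# Foreground at 1 m, background at 5 m, focal length of 1000 px.
for shift in (0.01, 0.05, 0.10):
    print(shift, disocclusion_width(1000.0, shift, 1.0, 5.0))
```

Every pixel of that gap has to be synthesized rather than observed, which is why quality tends to degrade as the virtual camera moves further from the original position.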

FAQ

What image characteristics yield best results?

Images with strong subject separation, good lighting, and clear edges typically produce optimal 3D conversions.

How can I improve processing speed?

Use GPU acceleration and reduce the source image resolution for faster conversion times.

What file formats are supported?

The system currently processes JPEG images most reliably.

How can I address edge artifacts?

Try a source image with cleaner subject edges, and pre-process noisy or heavily compressed images before conversion.
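Edge artifacts often trace back to isolated errors in the depth map near object boundaries. One possible clean-up step, shown here as an assumption rather than a documented part of the pipeline, is a small median filter on the depth map: it removes single-pixel outliers while keeping sharp depth steps in place. The function name is hypothetical and the depth map is assumed to be a nested list.

```python
from statistics import median


def median_filter3(depth):
    """Apply a 3x3 median filter to a 2D depth map, clamping at the borders."""
    height, width = len(depth), len(depth[0])
    result = []
    for y in range(height):
        row = []
        for x in range(width):
            window = [
                depth[min(max(y + dy, 0), height - 1)][min(max(x + dx, 0), width - 1)]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            ]
            row.append(median(window))
        result.append(row)
    return result


noisy = [
    [0.2, 0.2, 0.2],
    [0.2, 0.9, 0.2],  # a single outlier in an otherwise flat region
    [0.2, 0.2, 0.2],
]
print(median_filter3(noisy))
```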

Emerging Capabilities

Continued advancements in neural rendering and generative AI promise even more sophisticated 3D conversion capabilities, including real-time processing and enhanced viewpoint flexibility.


