CoSAI Launched: Founding Members Unite for Secure AI

AI's rapid growth demands a robust security framework and standards that can keep up. That's why we introduced the Secure AI Framework (SAIF) last year, knowing it was just the beginning. To make any industry framework work, you need close collaboration and a place to do it. That's where we are today.

At the Aspen Security Forum, we're excited to announce the launch of the Coalition for Secure AI (CoSAI) with our industry peers. We've spent the past year bringing this coalition together to tackle the unique security challenges AI brings, both now and in the future.

CoSAI's founding members include Amazon, Anthropic, Chainguard, Cisco, Cohere, GenLab, IBM, Intel, Microsoft, NVIDIA, OpenAI, PayPal, and Wiz. The coalition is being established under OASIS Open, the international standards and open-source consortium.

Introducing CoSAI’s Inaugural Workstreams

As everyone from individual developers to big companies works on adopting common security standards and best practices, CoSAI will back this collective effort in AI security. Today, we're kicking off with the first three areas the coalition will focus on, working hand-in-hand with industry and academia:

- Software Supply Chain Security for AI systems: Google has been working to extend SLSA Provenance to AI models, which helps determine whether AI software is secure by tracing how it was created and handled throughout the software supply chain. This workstream aims to improve AI security by offering guidance on evaluating provenance, managing risks from third-party models, and assessing the provenance of the full AI application, building on the existing SSDF and SLSA security principles for both AI and traditional software.

- Preparing defenders for a changing cybersecurity landscape: Security practitioners handling day-to-day AI governance have no simple path through the complexity of AI security concerns. This workstream will create a defender's framework to help them identify where to invest and which mitigation techniques to apply to address the security impact of AI. The framework will scale its mitigation strategies as offensive cybersecurity capabilities in AI models advance.

- AI security governance: Governing AI security requires new resources and an understanding of what makes AI security unique. CoSAI will develop a list of risks and controls, a checklist, and a scorecard to help practitioners assess their readiness and manage, monitor, and report on the security of their AI products.
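
The provenance checks mentioned in the supply chain workstream can be illustrated with a small sketch. SLSA provenance is typically distributed as an in-toto Statement whose `subject` field lists artifact names and digests. Assuming the Statement's signature has already been verified (this sketch does not check signatures) and that the model ships as a single file, a consumer could confirm that a downloaded model is the artifact the provenance describes. The helper names and the `model.safetensors` file name are hypothetical; this is an illustration, not CoSAI guidance.

```python
import hashlib
from pathlib import Path

# predicateType URI used by SLSA v1.0 provenance
SLSA_PROVENANCE_V1 = "https://slsa.dev/provenance/v1"


def sha256_of_file(path):
    """Hash the file in 1 MiB chunks so large model weights need not fit in memory."""
    h = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def artifact_matches_provenance(artifact_path, statement):
    """Hypothetical helper: True if the artifact's SHA-256 digest appears among
    the subjects of an in-toto Statement carrying SLSA v1 provenance.
    Assumes the Statement's signature was already verified elsewhere."""
    if statement.get("predicateType") != SLSA_PROVENANCE_V1:
        return False
    digest = sha256_of_file(artifact_path)
    return any(
        subject.get("digest", {}).get("sha256") == digest
        for subject in statement.get("subject", [])
    )


if __name__ == "__main__":
    import tempfile

    with tempfile.TemporaryDirectory() as d:
        model = Path(d) / "model.safetensors"  # hypothetical model file
        model.write_bytes(b"example weights")
        statement = {
            "_type": "https://in-toto.io/Statement/v1",
            "subject": [
                {"name": model.name, "digest": {"sha256": sha256_of_file(model)}}
            ],
            "predicateType": SLSA_PROVENANCE_V1,
            "predicate": {},  # buildDefinition/runDetails omitted in this sketch
        }
        print(artifact_matches_provenance(model, statement))  # True
        model.write_bytes(b"tampered weights")
        print(artifact_matches_provenance(model, statement))  # False
```

A digest match only shows that the file is the one the provenance describes. Deciding whether to trust it still means verifying the signature and checking the builder identity recorded in the predicate's `runDetails`.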

In addition, CoSAI will collaborate with organizations such as the Frontier Model Forum, Partnership on AI, the Open Source Security Foundation, and MLCommons to advance responsible AI.

What’s Next

As AI keeps moving forward, we're committed to making sure our risk management strategies keep pace. We've seen great support from the industry over the past year to make AI safe and secure. Even better, we're seeing real action from developers, experts, and companies of all sizes to help organizations use AI safely.

AI developers need—and end users deserve—a security framework that's up to the challenge and responsibly seizes the opportunities ahead. CoSAI is the next big step in this journey, and we'll have more updates coming soon. To find out how you can support CoSAI, check out coalitionforsecureai.org. In the meantime, head over to our Secure AI Framework page to learn more about Google's work on AI security.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

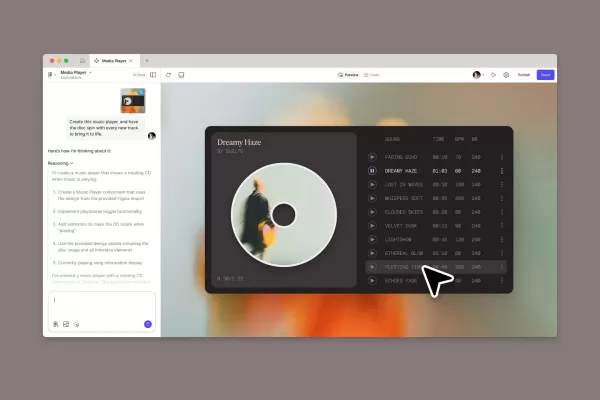

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (33)

JonathanRamirez

August 27, 2025 at 3:01:30 AM EDT

Wow, CoSAI sounds like a game-changer for AI security! It's cool to see big players teaming up for SAIF. Curious how this'll shape AI ethics debates. 🤔

BillyAdams

August 13, 2025 at 9:00:59 PM EDT

This CoSAI initiative sounds promising! 😊 It's cool to see big players teaming up for AI security, but I wonder how they'll balance innovation with strict standards. Could be a game-changer if done right!

RobertMartinez

July 23, 2025 at 12:59:47 AM EDT

Wow, CoSAI sounds like a game-changer! Finally, some serious teamwork to lock down AI security. I’m curious how SAIF will evolve with all these big players collaborating. 🛡️

GaryWilson

April 24, 2025 at 6:18:04 AM EDT

CoSAI's launch is a big step forward for AI security. It's good to see so many founding members joining together. The framework looks solid, but I'm still not sure how it works. I'd like clearer guides! 🤔

AnthonyJohnson

April 24, 2025 at 2:42:02 AM EDT

CoSAI's launch is a big step forward for AI security. It's great to see so many founding members united. The framework seems solid, but I'm still a bit confused about how it all works. Clearer guides would be helpful! 🤔

FredLewis

April 23, 2025 at 12:23:37 PM EDT

CoSAI's launch is a big step forward for AI security. It's great to see so many founding members coming together. The framework seems solid, but I'm still a bit confused about how it all works. More clear guides would be helpful! 🤔