DeepMind's AI Secures Gold at 2025 Math Olympiad

DeepMind's AI has made a striking leap in mathematical reasoning, reaching gold-medal standard at the 2025 International Mathematical Olympiad (IMO) just a year after a silver-medal result in 2024. The result underscores AI's growing ability to solve complex, abstract problems that call for human-like creativity. This article traces DeepMind's path from silver to gold, the key technical advances behind it, and the wider impact of the milestone.

Why the IMO Matters

Since 1959, the International Mathematical Olympiad has been the world's top math competition for high school students. It challenges participants with six intricate problems in algebra, geometry, number theory, and combinatorics, each worth seven points for a maximum score of 42, and demands exceptional creativity, logic, and elegant proofs.

For AI, the IMO is a formidable test. Unlike pattern recognition or strategic games like Go, Olympiad math requires abstract reasoning and the synthesis of novel ideas, skills long considered uniquely human. The IMO thus serves as a benchmark for AI's progress toward human-like intelligence.

2024's Silver Medal Milestone

In 2024, DeepMind debuted two AI systems for IMO problems: AlphaProof and AlphaGeometry 2, both leveraging "neuro-symbolic" AI, blending large language models (LLMs) with symbolic logic.

AlphaProof used Lean, a formal mathematical language, to prove statements. It coupled a pretrained language model with the AlphaZero reinforcement learning algorithm, which is famed for mastering board games. A fine-tuned Gemini model translated natural-language problems into Lean statements to build a large training library, and AlphaProof refined its skills by attempting millions of these problems and learning from the proofs that checked out.
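To give a flavor of what a formal statement looks like, here is a toy Lean 4 example. It is not taken from AlphaProof or from any IMO problem: the theorem name even_add_even is made up for illustration, and the one-line proof assumes a recent Lean 4 toolchain in which the omega tactic is available.

```lean
-- Toy statement, far simpler than any IMO problem:
-- the sum of two even natural numbers is even.
-- Assumption: a recent Lean 4 toolchain that ships the `omega` tactic.
theorem even_add_even (a b : Nat) (ha : a % 2 = 0) (hb : b % 2 = 0) :
    (a + b) % 2 = 0 := by
  omega
```

The point of a formal language is that the proof checker either accepts the proof or rejects it, which gives a reinforcement learning system an unambiguous success signal.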

AlphaGeometry 2 excelled in geometry, with Gemini predicting auxiliary constructions and a symbolic engine handling deductions. This hybrid approach enabled it to solve complex geometric problems.
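The division of labor described above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not AlphaGeometry 2's actual design: facts are plain strings, the symbolic engine is a simple forward-chaining loop, and the list passed as proposals is a hypothetical stand-in for the language model's suggested auxiliary constructions.

```python
from typing import Iterable

# A rule is (premises, conclusion): if every premise is known, add the conclusion.
Rule = tuple[frozenset[str], str]


def deduce(facts: set[str], rules: list[Rule]) -> set[str]:
    """Symbolic step: forward-chain over the rules until nothing new appears."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


def solve(facts: set[str], rules: list[Rule], goal: str, proposals: Iterable[str]):
    """Alternate deduction with proposed auxiliary facts until the goal is reached."""
    known = deduce(facts, rules)
    used = []
    for construction in proposals:  # hypothetical stand-in for model suggestions
        if goal in known:
            break
        used.append(construction)
        known = deduce(known | {construction}, rules)
    return goal in known, used


# Toy problem: the goal only becomes derivable once the midpoint M is added.
rules = [
    (frozenset({"AB = AC", "M is midpoint of BC"}),
     "triangles ABM and ACM are congruent"),
    (frozenset({"triangles ABM and ACM are congruent"}),
     "angle ABC = angle ACB"),
]
solved, used = solve(
    {"AB = AC"},
    rules,
    "angle ABC = angle ACB",
    ["D is on AB", "M is midpoint of BC"],
)
print(solved, used)
```

In this toy run the first proposal is useless and the second unlocks the proof, which is the pattern the hybrid design relies on: suggestions can be wrong at no cost, because only the deduction engine decides what counts as proven.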

The systems solved four of six IMO problems (two in algebra, one in number theory, and one in geometry), scoring 28 of 42 points, a silver-medal score one point short of the gold threshold. This marked a historic AI achievement, though the problems first had to be translated into formal language by hand and some solutions took days of computation.

Key Innovations for Gold

DeepMind's jump to gold in 2025 stemmed from major technical advancements.

1. Natural Language Proofs

A pivotal shift was using natural language for proofs, eliminating the need for expert translations into formal languages. An upgraded Gemini with Deep Think capabilities processes problems directly, sketching informal proofs, formalizing key steps internally, and delivering clear English proofs. Reinforcement learning from human feedback (RLHF) ensured concise, logical solutions.

Gemini Deep Think stands out for its longer context window and larger inference-time token budget, which support multi-page reasoning. It also reasons in parallel: it generates hundreds of candidate solution paths and a supervisor ranks the best of them, much as a human might brainstorm before committing to an approach.
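The generate-then-rank pattern can be sketched as a best-of-n search. This is a minimal illustration, not Gemini Deep Think's implementation: the names best_of_n, generate, and score are hypothetical, and a toy integer puzzle stands in for proof generation.

```python
from concurrent.futures import ThreadPoolExecutor


def best_of_n(generate, score, n, workers=4):
    """Generate n candidates in parallel, then let a scorer rank them."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        candidates = list(pool.map(generate, range(n)))
    ranked = sorted(candidates, key=score, reverse=True)
    return ranked[0], ranked


# Toy task: find an integer x with x * x == 49.
def generate(seed: int) -> int:
    # A real generator would sample a full solution attempt; here the
    # seed itself is the candidate.
    return seed


def score(x: int) -> float:
    # Hypothetical "supervisor": 0 is a perfect score, lower is worse.
    return -abs(x * x - 49)


best, ranked = best_of_n(generate, score, n=10)
print(best)
```

The quality of the result depends almost entirely on the scorer: generating more candidates only helps if the ranking step can tell a sound attempt from a flawed one.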

2. Advanced Training Techniques

Gemini Deep Think was fine-tuned on a 100,000-solution corpus from math forums, arXiv, and college problem sets, with human mentors filtering errors. Reinforcement learning with stepwise rewards for verified sub-lemmas guided the model toward concise proofs. Training spanned three months, using 25 million TPU-hours.
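A stepwise reward of this kind can be sketched as follows. The function and its parameters (stepwise_reward, step_reward, length_penalty) are assumptions made for illustration, and a trivial arithmetic checker stands in for whatever verifier was actually used; the source does not describe the real reward function.

```python
def stepwise_reward(steps, verify, step_reward=1.0, length_penalty=0.1):
    """Credit each verified step in order; charge a small cost per step written.

    Credit stops at the first step that fails verification, so a proof
    cannot earn reward for anything built on an unverified claim.
    """
    total = 0.0
    for step in steps:
        if not verify(step):
            break
        total += step_reward
    return total - length_penalty * len(steps)


def check_sum(step: str) -> bool:
    """Toy verifier for steps of the form 'a+b=c' (hypothetical stand-in)."""
    left, right = step.split("=")
    a, b = left.split("+")
    return int(a) + int(b) == int(right)


good = stepwise_reward(["1+1=2", "2+2=4"], check_sum)
flawed = stepwise_reward(["1+1=2", "2+2=5", "3+3=6"], check_sum)
print(good, flawed)
```

The length penalty is what pushes toward concise proofs: two attempts with the same number of verified steps are ranked by how few steps they needed in total.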

3. Parallel Processing Power

Parallelization was critical: multiple reasoning branches were explored simultaneously, and compute was shifted dynamically toward the most promising ones, which proved especially effective for combinatorics. This approach, supported by DeepMind's TPU v5 clusters, mirrored human strategies such as testing inequalities before writing full proofs.
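One simple way to shift compute toward promising branches is successive halving, a standard scheduling technique used here purely for illustration; the source does not say which scheduler DeepMind used. The branch names, the rates table, and the progress function are all made up for the example.

```python
def successive_halving(branches, progress, budget=1):
    """Keep the more promising half of the branches each round, doubling the budget."""
    alive = list(branches)
    spent = {b: 0 for b in alive}
    while len(alive) > 1:
        for b in alive:
            spent[b] += budget  # only surviving branches consume compute
        alive.sort(key=lambda b: progress(b, spent[b]), reverse=True)
        alive = alive[: max(1, len(alive) // 2)]
        budget *= 2
    return alive[0], spent


# Hypothetical branches with a made-up "progress per unit of compute" rate.
rates = {"induction": 0.2, "invariant": 0.9, "extremal": 0.5, "counting": 0.1}


def progress(branch: str, steps: int) -> float:
    return rates[branch] * steps


winner, spent = successive_halving(rates, progress)
print(winner, spent)
```

Weak branches are dropped after a small investment, so most of the total budget ends up on the few branches that looked best early on.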

DeepMind's 2025 IMO Triumph

To ensure fairness, DeepMind froze the model's weights three weeks before the IMO and filtered unpublished problem solutions out of its training data. During the event, Gemini Deep Think worked from the six problem statements in plain text, without internet access, and produced its proofs within the 4.5-hour competition time limit. It earned perfect scores on five problems for a total of 35/42, enough for gold. The AI's proofs were praised as rigorous and thorough, matching human standards.

Impact on AI and Mathematics

DeepMind's success is a sign of AI's progress toward artificial general intelligence (AGI), since IMO problems demand advanced reasoning rather than recall. For mathematics, AI tools like Gemini Deep Think can help explore new theorems, verify conjectures, and streamline proofs, freeing mathematicians for conceptual work. However, AI's growing role raises questions about how math education and competitions should be structured in the future.

Future Prospects

While research-level mathematics remains a harder challenge than competition problems, DeepMind's rapid progress suggests AI could soon tackle major unsolved problems. The 2025 IMO victory highlights AI's advancing logical reasoning and has sparked debate over whether it will complement or redefine human creativity in mathematics.

Related article

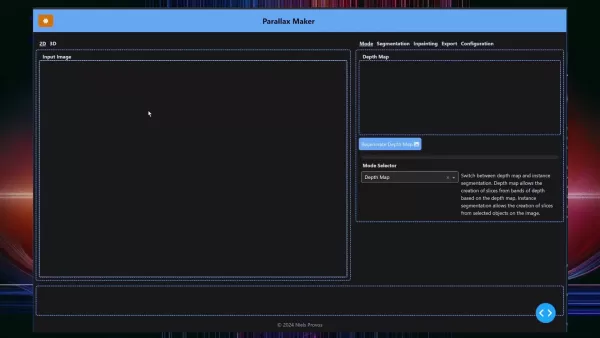

AI-Powered Parallax Maker: Craft Dynamic 2.5D Animations

Turn static images into captivating 2.5D animations with Parallax Maker. This open-source tool empowers artists and game developers to infuse depth and motion into their work. By leveraging the Stabil

AI-Powered Parallax Maker: Craft Dynamic 2.5D Animations

Turn static images into captivating 2.5D animations with Parallax Maker. This open-source tool empowers artists and game developers to infuse depth and motion into their work. By leveraging the Stabil

Trunk Tools Secures $40M to Advance AI-Driven Construction Solutions

Trunk Tools, an AI innovator transforming how construction professionals manage and utilize project data, has raised $40 million in a Series B round. Led by Insight Partners, with contributions from R

Trunk Tools Secures $40M to Advance AI-Driven Construction Solutions

Trunk Tools, an AI innovator transforming how construction professionals manage and utilize project data, has raised $40 million in a Series B round. Led by Insight Partners, with contributions from R

AI-Powered Real Estate Advertising: Boosting Lead Quality with Likely AI

In today's fast-paced real estate market, differentiation is key. Likely AI's Ad Creator transforms marketing by using artificial intelligence to craft highly targeted, impactful ad campaigns. This in

Comments (0)

0/200

AI-Powered Real Estate Advertising: Boosting Lead Quality with Likely AI

In today's fast-paced real estate market, differentiation is key. Likely AI's Ad Creator transforms marketing by using artificial intelligence to craft highly targeted, impactful ad campaigns. This in

Comments (0)

0/200
