Master ComfyUI Inpainting: Key Workflows and Essential Techniques for Flawless Edits

ComfyUI revolutionizes image editing through its advanced inpainting capabilities. Our comprehensive guide walks you through diverse workflow techniques - from fundamental image-to-image conversions to sophisticated ControlNet implementations and automated facial enhancements. Master these professional methods to elevate your ComfyUI expertise and transform your creative process.

Key Points

Master the core principles of ComfyUI's inpainting workflows.

Harness standard models for localized image modifications.

Explore ControlNet integration for superior inpainting precision.

Implement intelligent facial enhancement techniques.

Compare methodologies to identify optimal solutions for specific projects.

ComfyUI Inpainting Fundamentals

Why Learn ComfyUI Inpainting Workflows?

Learning inpainting in ComfyUI is optional, but it lets you edit selected parts of an image without regenerating the whole composition, which saves both time and compute.

ComfyUI first encodes the image into a compressed latent representation with a VAE. During sampling, only the masked region is re-noised and regenerated, while the latents outside the mask are preserved, so edits stay confined to the area you selected.
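
The idea of editing only the masked part of a latent can be sketched in a few lines. This is a simplified illustration rather than ComfyUI's actual sampler code: `blend_masked` and `latent_size` are hypothetical helpers, and the 8x downscale factor is an assumption that matches Stable Diffusion 1.x and SDXL VAEs.

```python
def blend_masked(original, regenerated, mask):
    """Keep original values where mask is 0, take regenerated values where mask is 1."""
    return [
        [m * new + (1.0 - m) * old for old, new, m in zip(o_row, n_row, m_row)]
        for o_row, n_row, m_row in zip(original, regenerated, mask)
    ]


def latent_size(width, height, downscale=8):
    """Spatial size of the latent for a pixel image (assumes 8x VAE downscaling)."""
    return width // downscale, height // downscale


# Toy 2x2 "latents": only the top-right cell is masked for editing.
original = [[0.1, 0.2], [0.3, 0.4]]
regenerated = [[0.9, 0.8], [0.7, 0.6]]
mask = [[0.0, 1.0], [0.0, 0.0]]

result = blend_masked(original, regenerated, mask)
print(result)
print(latent_size(512, 512))
```

Only the masked cell takes the regenerated value; everything else is carried over unchanged, which is why the surrounding content survives an inpainting pass.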

Image to Image Workflow With Inpainting: Using Standard Models

Initiate your inpainting journey with basic image transformation workflows. Beginners should first familiarize themselves with ComfyUI's core functionality before advancing to specialized techniques.

The foundation is the Set Latent Noise Mask node, which restricts sampling to the masked area. Make these connections:

- Connect the MASK output of Load Image to the mask input of Set Latent Noise Mask

- Connect the LATENT output of VAE Encode to the samples input of the same node

- Route the resulting masked latent to the latent_image input of the KSampler
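
The connections above can also be written down as a graph in ComfyUI's API (JSON) workflow format, which is a convenient way to check the wiring. This is a minimal sketch: the node class and input names reflect core ComfyUI as I understand it and may differ between versions, the function name is hypothetical, and the file names and default settings are placeholders.

```python
def build_standard_inpaint_graph(image_name, ckpt_name, positive, negative,
                                 seed=0, steps=20, cfg=7.0, denoise=0.6):
    """Return an API-format graph: node id -> {"class_type", "inputs"}.

    A link to another node is written as [source_node_id, output_index].
    """
    return {
        # Outputs: 0 = MODEL, 1 = CLIP, 2 = VAE
        "1": {"class_type": "CheckpointLoaderSimple",
              "inputs": {"ckpt_name": ckpt_name}},
        # Outputs: 0 = IMAGE, 1 = MASK (the mask painted in the Mask Editor)
        "2": {"class_type": "LoadImage", "inputs": {"image": image_name}},
        "3": {"class_type": "VAEEncode",
              "inputs": {"pixels": ["2", 0], "vae": ["1", 2]}},
        # Attach the mask so sampling only changes the masked area
        "4": {"class_type": "SetLatentNoiseMask",
              "inputs": {"samples": ["3", 0], "mask": ["2", 1]}},
        "5": {"class_type": "CLIPTextEncode",
              "inputs": {"text": positive, "clip": ["1", 1]}},
        "6": {"class_type": "CLIPTextEncode",
              "inputs": {"text": negative, "clip": ["1", 1]}},
        "7": {"class_type": "KSampler",
              "inputs": {"model": ["1", 0], "positive": ["5", 0],
                         "negative": ["6", 0], "latent_image": ["4", 0],
                         "seed": seed, "steps": steps, "cfg": cfg,
                         "sampler_name": "euler", "scheduler": "normal",
                         "denoise": denoise}},
        "8": {"class_type": "VAEDecode",
              "inputs": {"samples": ["7", 0], "vae": ["1", 2]}},
        "9": {"class_type": "SaveImage",
              "inputs": {"images": ["8", 0], "filename_prefix": "inpaint"}},
    }


graph = build_standard_inpaint_graph(
    image_name="portrait.png",      # hypothetical file in ComfyUI's input folder
    ckpt_name="model.safetensors",  # hypothetical checkpoint name
    positive="a red scarf",
    negative="blurry",
)
```

Reading the graph from node "2" to node "7" follows the same path as the three connections in the list: mask and latent meet in Set Latent Noise Mask, and its output feeds the KSampler.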

Implementation checklist:

- Import source imagery

- Access the Mask Editor for precise area selection

- Finalize and save mask parameters

- Select appropriate Stable Diffusion model

- Detail desired modifications through prompts

- Tune KSampler settings (seed, steps, CFG scale)

- Adjust denoise levels for subtle or significant changes
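
To make the last two checklist items concrete, the sketch below groups the KSampler settings into one object and contrasts a subtle edit with a stronger one. `SamplerSettings` is a hypothetical helper, and the values 0.3 and 0.8 are illustrative starting points rather than recommendations from ComfyUI; the field names are assumed to match the KSampler node's inputs.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class SamplerSettings:
    """KSampler-style settings with basic range checks."""
    seed: int = 0
    steps: int = 20
    cfg: float = 7.0
    sampler_name: str = "euler"
    scheduler: str = "normal"
    denoise: float = 0.5

    def __post_init__(self):
        if not 0.0 <= self.denoise <= 1.0:
            raise ValueError("denoise must be between 0.0 and 1.0")
        if self.steps < 1:
            raise ValueError("steps must be at least 1")

    def to_inputs(self):
        """Return the settings as a dict that can be merged into node inputs."""
        return asdict(self)


# Lower denoise stays close to the original pixels; higher denoise repaints more.
subtle = SamplerSettings(seed=42, denoise=0.3)
strong = SamplerSettings(seed=42, denoise=0.8)
```

Keeping the seed fixed while changing only denoise makes it easier to compare how much of the masked area each setting rewrites.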

Advanced ComfyUI Inpainting Techniques

Inpainting with an Inpaint Model

Dedicated inpaint checkpoints are trained specifically to fill masked regions. They are used with the VAE Encode (for Inpainting) node, which takes the mask as a direct input, so no separate noise mask node is needed. The workflow changes as follows:

- Integrate the VAE Encode (for Inpainting) node

- Connect image pixels and mask outputs accordingly

- Route processed latent outputs to the KSampler

- Maintain standard subsequent steps (image import, model selection, prompting)

- Set denoise to 1.0; this node blanks out the masked pixels before encoding, so lower values tend to leave gray residue in the result
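
As a sketch of how this differs from the standard workflow, the helper below returns the encoder node in API format together with the KSampler inputs that change. The function name is hypothetical, and the `VAEEncodeForInpaint` class name, its `grow_mask_by` input, and the value 6 are assumptions about core ComfyUI that may vary by version.

```python
def inpaint_model_nodes(image_link, mask_link, vae_link,
                        encoder_id="3", grow_mask_by=6):
    """Return the inpaint encoder node and the KSampler inputs to override.

    Links are written as [source_node_id, output_index].
    """
    encoder = {
        "class_type": "VAEEncodeForInpaint",
        "inputs": {
            "pixels": image_link,
            "mask": mask_link,
            "vae": vae_link,
            # Slightly enlarges the mask to help the edit blend at the edges
            "grow_mask_by": grow_mask_by,
        },
    }
    sampler_overrides = {
        "latent_image": [encoder_id, 0],
        "denoise": 1.0,  # full denoise, as described in the list above
    }
    return {encoder_id: encoder}, sampler_overrides


nodes, overrides = inpaint_model_nodes(
    image_link=["2", 0],  # IMAGE output of a Load Image node
    mask_link=["2", 1],   # MASK output of the same node
    vae_link=["1", 2],    # VAE output of the checkpoint loader
)
```

The returned node replaces the VAE Encode and Set Latent Noise Mask pair, and the overrides are merged into the existing KSampler inputs.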

ControlNet Inpainting

ControlNet adds a conditioning signal that guides what is generated inside the mask. The setup needs a few additional nodes but gives tighter control over the result.

Critical implementation steps:

- Include Load ControlNet and Apply ControlNet nodes

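- Use the Inpaint Preprocessor to combine the image and mask into a single ControlNet hint image (this node typically comes from a ControlNet preprocessor custom node pack rather than core ComfyUI)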

- Verify proper node connections and port alignments

- Select complementary base and ControlNet models
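
The three ControlNet-specific nodes can be sketched in API format as follows. Treat the details as assumptions: `ControlNetLoader` and `ControlNetApply` are core class names as far as I know (newer builds also offer an advanced variant), `InpaintPreprocessor` is assumed to come from a custom preprocessor pack, and the function name, node ids, and model file name are placeholders.

```python
def controlnet_inpaint_nodes(image_link, mask_link, positive_link,
                             control_net_name, strength=1.0):
    """Return API-format nodes that apply an inpaint ControlNet to a prompt.

    Links are written as [source_node_id, output_index].
    """
    return {
        "10": {"class_type": "ControlNetLoader",
               "inputs": {"control_net_name": control_net_name}},
        # Combines image and mask into one hint image for the ControlNet
        "11": {"class_type": "InpaintPreprocessor",
               "inputs": {"image": image_link, "mask": mask_link}},
        # Output 0 is the conditioning that replaces the plain positive prompt
        "12": {"class_type": "ControlNetApply",
               "inputs": {"conditioning": positive_link,
                          "control_net": ["10", 0],
                          "image": ["11", 0],
                          "strength": strength}},
    }


cn_nodes = controlnet_inpaint_nodes(
    image_link=["2", 0],
    mask_link=["2", 1],
    positive_link=["5", 0],
    control_net_name="inpaint_controlnet.safetensors",  # placeholder file name
)
```

The KSampler's positive input would then point at `["12", 0]` instead of the original prompt node. The ControlNet file should match the base model family, which is the point of the last item in the list above.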

Automatic Inpainting for Faces

Automatic face inpainting relies on the Face Detailer node, which is provided by the ComfyUI Impact Pack custom nodes rather than core ComfyUI. It uses a YOLO-based detector to locate faces, then re-renders each detected region at higher detail. The process involves:

- Adding the Face Detailer node to your workflow

- Connecting its image, model, CLIP, and VAE inputs

- Connecting the positive and negative prompt inputs

- Connecting a face detector to the node so faces are found automatically

| Feature | Enhanced | Standard |
|---|---|---|
| Facial Clarity | High-resolution detail | Basic rendering |
| Texture Quality | Refined skin textures | Simplified surfaces |
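
The detect-then-refine idea behind automatic face inpainting can be illustrated without any ComfyUI code. The sketch below is a conceptual model only, not the Face Detailer implementation: `select_faces` and `expand_box` are hypothetical helpers, and the detection format, threshold, and crop factor are assumptions chosen for the example.

```python
def select_faces(detections, threshold=0.5):
    """Keep the boxes of detections whose confidence meets the threshold."""
    return [d["box"] for d in detections if d["score"] >= threshold]


def expand_box(box, crop_factor, image_width, image_height):
    """Grow a box (x1, y1, x2, y2) around its centre and clamp it to the image.

    The extra margin gives the sampler some surrounding context to blend with.
    """
    x1, y1, x2, y2 = box
    cx, cy = (x1 + x2) / 2, (y1 + y2) / 2
    half_w = (x2 - x1) * crop_factor / 2
    half_h = (y2 - y1) * crop_factor / 2
    return (
        max(0, int(cx - half_w)),
        max(0, int(cy - half_h)),
        min(image_width, int(cx + half_w)),
        min(image_height, int(cy + half_h)),
    )


# Hypothetical detector output for a 512x512 image
detections = [
    {"box": (100, 100, 200, 200), "score": 0.92},
    {"box": (300, 300, 320, 320), "score": 0.20},  # below threshold, ignored
]

faces = select_faces(detections)
regions = [expand_box(b, 2.0, 512, 512) for b in faces]
print(regions)
```

Each resulting region would then be cropped, inpainted at a higher working resolution, and pasted back, which is how a small face in a large image can end up with more detail than a single full-image pass provides.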

Step-by-Step Guides

Standard Model Workflow

- Import source image into ComfyUI

- Access the Mask Editor for target selection

- Define modification regions precisely

- Save mask configurations

- Choose appropriate Stable Diffusion model

- Detail modification requirements through text prompts

- Optimize KSampler parameters

Inpaint Model Workflow

- Add the VAE Encode (for Inpainting) node

- Establish image/mask input connections

- Connect processed outputs to the KSampler

- Import reference image

- Select dedicated inpaint model

- Specify modification requirements

ControlNet Workflow

- Integrate ControlNet-specific nodes

- Implement the Inpaint Preprocessor

- Route image/mask data appropriately

- Process through ControlNet architecture

Face Detailing Workflow

- Add the Face Detailer component

- Configure nodal relationships

- Enable automated YOLO detection

- Execute intelligent facial enhancements

Standard Models Pros and Cons

Advantages

Versatile application across diverse content types

Straightforward implementation process

Effective for nuanced adjustments when properly configured

Limitations

Results can lose coherence with the surrounding image at high denoise levels

Less specialized than dedicated models

Generic output quality compared to focused solutions

FAQ

What is ComfyUI?

An advanced node-based interface for Stable Diffusion workflows, enabling complex image generation and manipulation through visual programming.

What is Inpainting, and how does it work in ComfyUI?

A technique for selectively reconstructing parts of an image: you mask the target area, and the model generates new content there that blends with the existing elements.

What are latent models?

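Models that work on a compressed latent representation of an image rather than on raw pixels. A VAE converts images to and from this representation, which is what makes targeted, efficient modifications possible in ComfyUI workflows.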

What does YOLO mean in terms of ComfyUI?

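YOLO ("You Only Look Once") is a family of object detection models. In ComfyUI face workflows it locates faces so they can be enhanced automatically; it detects where faces are rather than recognizing who they belong to.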

Does ComfyUI support ControlNet?

Yes, through specialized nodes that enable condition-based generation control using various reference maps.

Related Questions

What are the best models for inpainting in ComfyUI?

Model selection depends on content type. EpicRealism excels for general inpainting, while realisticVision specializes in portrait work. ComfyUI's search functionality facilitates rapid model integration.

Related article

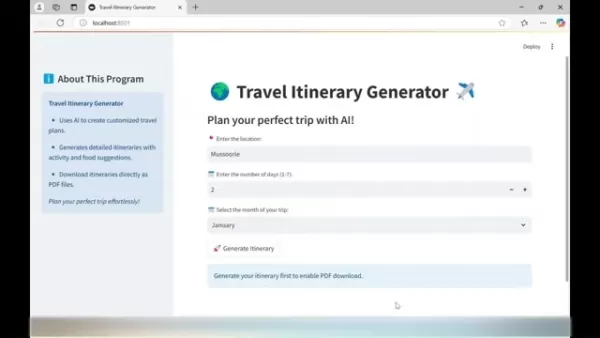

AI-Powered Travel Itinerary Generator Helps You Plan the Perfect Trip

Planning unforgettable journeys just got simpler with cutting-edge AI technology. The Travel Itinerary Generator revolutionizes vacation planning by crafting customized travel guides packed with attractions, dining suggestions, and daily schedules -

AI-Powered Travel Itinerary Generator Helps You Plan the Perfect Trip

Planning unforgettable journeys just got simpler with cutting-edge AI technology. The Travel Itinerary Generator revolutionizes vacation planning by crafting customized travel guides packed with attractions, dining suggestions, and daily schedules -

Apple Vision Pro Debuts as a Game-Changer in Augmented Reality

Apple makes a bold leap into spatial computing with its groundbreaking Vision Pro headset - redefining what's possible in augmented and virtual reality experiences through cutting-edge engineering and thoughtful design.Introduction to Vision ProRedef

Apple Vision Pro Debuts as a Game-Changer in Augmented Reality

Apple makes a bold leap into spatial computing with its groundbreaking Vision Pro headset - redefining what's possible in augmented and virtual reality experiences through cutting-edge engineering and thoughtful design.Introduction to Vision ProRedef

Perplexity AI Shopping Assistant Transforms Online Shopping Experience

Perplexity AI is making waves in e-commerce with its revolutionary AI shopping assistant, poised to transform how consumers discover and purchase products online. This innovative platform merges conversational AI with e-commerce functionality, challe

Comments (0)

0/200

Perplexity AI Shopping Assistant Transforms Online Shopping Experience

Perplexity AI is making waves in e-commerce with its revolutionary AI shopping assistant, poised to transform how consumers discover and purchase products online. This innovative platform merges conversational AI with e-commerce functionality, challe

Comments (0)

0/200
