Google AI Assists in Decoding Dolphin Communication with DolphinGemma

For decades, scientists have been fascinated by the clicks, whistles, and burst pulses of dolphins, trying to crack the code of their intricate communication. Imagine if we could not only eavesdrop on these marine conversations but also decipher their patterns well enough to craft our own dolphin-like responses. This dream is inching closer to reality, and on National Dolphin Day, Google, in partnership with researchers from Georgia Tech and the fieldwork of the Wild Dolphin Project (WDP), is thrilled to share exciting updates on DolphinGemma. This groundbreaking AI model is trained to grasp the nuances of dolphin vocalizations and even generate novel sound sequences, pushing the limits of AI and our potential to connect with the ocean's inhabitants.

Decades of Dolphin Society Research

Understanding any species isn't a walk in the park; it requires a deep dive into their world. That's where the WDP comes in. Since 1985, they've conducted the world's longest-running underwater dolphin research project, studying a community of wild Atlantic spotted dolphins (Stenella frontalis) in the Bahamas across generations. Their "In Their World, on Their Terms" approach means they gather a wealth of data without disturbing the dolphins. This includes decades of underwater video and audio, all meticulously linked to individual dolphin identities, life histories, and behaviors. It's like having a detailed diary of dolphin life.

A pod of Atlantic spotted dolphins, Stenella frontalis

The WDP's primary focus is to observe and analyze the dolphins' natural communication and social interactions. By working underwater, researchers can directly connect sounds to specific behaviors in a way surface observations can't. They've spent years correlating different sound types with behavioral contexts. For example:

- Signature whistles, which act like unique names, help mothers and calves reunite.

- Burst-pulse "squawks" often accompany dolphin disputes.

- Click "buzzes" are commonly heard during courtship or when chasing sharks.

Knowing the individual dolphins involved is key to accurate interpretation. The ultimate aim of this long-term observation is to decode the structure and potential meanings within these natural sound sequences, looking for patterns and rules that might hint at a form of language. This rich analysis of natural communication is the backbone of WDP's research and provides crucial context for AI analysis.

Left: A mother spotted dolphin watches her calf while foraging. She'll use her unique signature whistle to call him back when he's done. Right: A spectrogram showing the whistle.

Introducing DolphinGemma

Analyzing dolphins' natural, complex communication is no small feat, and WDP's extensive, labeled dataset offers a golden opportunity for AI innovation. Enter DolphinGemma. Developed by Google, it relies on specific Google audio technologies: the SoundStream tokenizer efficiently represents dolphin recordings as a sequence of discrete audio tokens, which are then processed by a model architecture suited to complex sequences. At roughly 400 million parameters, the model is small enough to run directly on the Pixel phones WDP uses in the field.
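To make the tokenizer step more concrete: SoundStream is a neural audio codec that encodes audio into frames and quantizes each frame into discrete codes using residual vector quantization (RVQ). The sketch below illustrates only the RVQ idea on toy two-dimensional "frames" with hand-picked codebooks. In SoundStream the encoder and codebooks are learned from data; the names `rvq_encode`/`rvq_decode` and all codebook values here are hypothetical and do not reflect DolphinGemma's actual configuration.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, ...]


def _nearest(codebook: Sequence[Vector], v: Vector) -> int:
    """Index of the codeword closest to v (squared Euclidean distance)."""
    return min(
        range(len(codebook)),
        key=lambda i: sum((c - x) ** 2 for c, x in zip(codebook[i], v)),
    )


def rvq_encode(frame: Vector, codebooks: Sequence[Sequence[Vector]]) -> List[int]:
    """Residual vector quantization: each stage quantizes what earlier stages missed."""
    residual = list(frame)
    tokens = []
    for codebook in codebooks:
        idx = _nearest(codebook, tuple(residual))
        tokens.append(idx)
        # Subtract the chosen codeword; the next stage works on the leftover error.
        residual = [r - c for r, c in zip(residual, codebook[idx])]
    return tokens


def rvq_decode(tokens: Sequence[int], codebooks: Sequence[Sequence[Vector]]) -> List[float]:
    """Approximate the original frame by summing the chosen codewords."""
    out = [0.0] * len(codebooks[0][0])
    for idx, codebook in zip(tokens, codebooks):
        out = [o + c for o, c in zip(out, codebook[idx])]
    return out


# Hypothetical toy codebooks: a coarse stage followed by a finer refinement stage.
CODEBOOKS = [
    [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)],
    [(0.0, 0.0), (0.25, 0.0), (0.0, 0.25), (-0.25, -0.25)],
]

if __name__ == "__main__":
    frame = (1.2, 0.1)  # one hypothetical encoder output frame
    tokens = rvq_encode(frame, CODEBOOKS)
    print(tokens, rvq_decode(tokens, CODEBOOKS))
```

Each frame becomes a short tuple of token IDs (here `[1, 1]` for the frame `(1.2, 0.1)`, reconstructed as `[1.25, 0.0]`), and adding more stages refines the reconstruction. A sequence model then only has to work with these integer tokens.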

Left: Whistles and burst pulses generated during DolphinGemma's early testing.

DolphinGemma draws inspiration from Gemma, Google's collection of lightweight, state-of-the-art open models, which are built from the same research and technology used to create the Gemini models. Trained on WDP's acoustic database of wild Atlantic spotted dolphins, DolphinGemma operates as an audio-in, audio-out model: it processes sequences of natural dolphin sounds to identify patterns and structure, and ultimately predicts the likely next sounds in a sequence, much as large language models predict the next word in a sentence.
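The next-token objective can be illustrated with a deliberately simple stand-in. DolphinGemma is a neural sequence model; the sketch below instead uses a bigram frequency model over hypothetical integer audio-token IDs, only to show what "predict the likely next sound" and "generate a continuation" mean for a tokenized sequence. The function names and the toy corpus are illustrative assumptions.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence


def train_bigram(sequences: Sequence[Sequence[int]]) -> Dict[int, Counter]:
    """Count how often each token is immediately followed by each other token."""
    counts: Dict[int, Counter] = defaultdict(Counter)
    for seq in sequences:
        for prev, nxt in zip(seq, seq[1:]):
            counts[prev][nxt] += 1
    return counts


def next_token_probs(counts: Dict[int, Counter], prev: int) -> Dict[int, float]:
    """Estimated distribution over the next token given only the previous token."""
    following = counts.get(prev)  # .get avoids inserting unseen keys into the defaultdict
    if not following:
        return {}
    total = sum(following.values())
    return {tok: n / total for tok, n in following.items()}


def generate(counts: Dict[int, Counter], start: int, length: int) -> List[int]:
    """Greedy continuation: repeatedly append the most likely next token."""
    out = [start]
    for _ in range(length):
        probs = next_token_probs(counts, out[-1])
        if not probs:
            break  # no observed continuation for this token
        out.append(max(probs, key=probs.get))
    return out


if __name__ == "__main__":
    # Hypothetical tokenized recordings.
    corpus = [[3, 7, 7, 2], [3, 7, 2, 5], [3, 7, 2]]
    model = train_bigram(corpus)
    print(next_token_probs(model, 7))
    print(generate(model, 3, 4))
```

A neural model conditions on the whole preceding context rather than just the last token, but the generation loop (predict, append, repeat) has the same shape.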

This season, WDP is deploying DolphinGemma in the field, with immediate potential benefits. By identifying recurring sound patterns, clusters, and reliable sequences, the model can help researchers uncover hidden structures and potential meanings within the dolphins' natural communication, work that previously required immense human effort. Eventually, these patterns, combined with synthetic sounds that researchers create to refer to objects the dolphins enjoy, could pave the way for a shared vocabulary and interactive communication.
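One simple way to surface recurring sequences in tokenized recordings is to count n-grams (runs of n consecutive tokens) and keep those that occur often. This is a hypothetical baseline for illustration, not a description of how DolphinGemma finds patterns; the token IDs, the `recurring_ngrams` name, and the thresholds are assumptions.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def recurring_ngrams(
    sequences: Sequence[Sequence[int]], n: int, min_count: int
) -> List[Tuple[Tuple[int, ...], int]]:
    """Return n-grams occurring at least min_count times, most frequent first."""
    counts: Counter = Counter()
    for seq in sequences:
        # Slide a window of length n over each sequence (overlapping windows count).
        for i in range(len(seq) - n + 1):
            counts[tuple(seq[i : i + n])] += 1
    frequent = [(gram, c) for gram, c in counts.items() if c >= min_count]
    # Sort by descending count, then by the n-gram itself for a deterministic order.
    return sorted(frequent, key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Hypothetical tokenized recordings.
    recordings = [[4, 9, 1, 4, 9], [0, 4, 9, 1], [9, 1, 3]]
    print(recurring_ngrams(recordings, n=2, min_count=2))
    print(recurring_ngrams(recordings, n=3, min_count=2))
```

On these toy recordings, `n=2` returns `[((4, 9), 3), ((9, 1), 3)]` and `n=3` returns `[((4, 9, 1), 2)]`. Raw counts like these ignore timing and context, which is one reason a learned sequence model is more useful on real data.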

Using Pixel Phones to Listen to and Analyze Dolphin Sounds

Alongside analyzing natural communication, WDP is also exploring a different avenue: potential two-way interaction using technology in the ocean. This has led to the development of the CHAT (Cetacean Hearing Augmentation Telemetry) system, in collaboration with the Georgia Institute of Technology. CHAT is an underwater computer designed not to decode the dolphins' complex natural language but to establish a simpler, shared vocabulary.

The idea is to associate novel synthetic whistles (generated by CHAT and distinct from natural dolphin sounds) with specific objects the dolphins find interesting, such as sargassum, seagrass, or the scarves researchers use. Researchers first demonstrate the system by using it with each other, in the hope that the curious dolphins will learn to mimic these whistles to request the items. As understanding of the dolphins' natural sounds grows, those natural sounds can also be incorporated into the system.
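A synthetic whistle can be as simple as a tone whose frequency sweeps along a chosen contour. The sketch below generates linear chirps and assigns each object its own hypothetical contour. The sample rate, frequencies, durations, and names (`OBJECT_WHISTLES`, `linear_chirp`, `whistle_for`) are illustrative assumptions, not CHAT's actual whistle designs.

```python
import math
from typing import Dict, List, Tuple

SAMPLE_RATE = 48_000  # Hz; an assumed value, not CHAT's documented rate

# Hypothetical mapping from object to a (start_hz, end_hz) whistle contour.
OBJECT_WHISTLES: Dict[str, Tuple[float, float]] = {
    "sargassum": (8_000.0, 14_000.0),  # upsweep
    "seagrass": (14_000.0, 8_000.0),   # downsweep
    "scarf": (10_000.0, 10_000.0),     # flat tone
}


def linear_chirp(start_hz: float, end_hz: float, duration_s: float,
                 sample_rate: int = SAMPLE_RATE) -> List[float]:
    """Sine wave whose instantaneous frequency moves linearly from start_hz to end_hz."""
    n = int(duration_s * sample_rate)
    rate = (end_hz - start_hz) / duration_s  # sweep rate in Hz per second
    samples = []
    for i in range(n):
        t = i / sample_rate
        # Phase is the integral of f(t) = start_hz + rate * t, times 2*pi.
        phase = 2 * math.pi * (start_hz * t + 0.5 * rate * t * t)
        samples.append(math.sin(phase))
    return samples


def whistle_for(obj: str, duration_s: float = 0.5) -> List[float]:
    """Synthesize the (hypothetical) whistle assigned to an object."""
    start_hz, end_hz = OBJECT_WHISTLES[obj]
    return linear_chirp(start_hz, end_hz, duration_s)


if __name__ == "__main__":
    for name in OBJECT_WHISTLES:
        print(name, len(whistle_for(name)), "samples")
```

Integrating the instantaneous frequency to get the phase, rather than computing `sin(2*pi*f(t)*t)`, keeps the sweep continuous and makes it actually start and end at the intended frequencies.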

To enable two-way interaction, the CHAT system must:

- Hear the mimic accurately amid ocean noise.

- Identify which whistle was mimicked in real time.

- Inform the researcher (via bone-conducting headphones that work underwater) which object the dolphin "requested."

- Allow the researcher to respond quickly by offering the correct object, reinforcing the connection.
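The "identify which whistle was mimicked" step is, at its simplest, template matching. The sketch below assumes a pitch contour (frequency over time, in kHz) has already been extracted from the hydrophone signal, which in practice is the hard part amid ocean noise. It compares the contour against one stored template per object and reports an object to the researcher only if the best match is close enough. The templates, the error threshold, and the function names are hypothetical.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical templates: whistle pitch contours in kHz, one per object.
TEMPLATES: Dict[str, List[float]] = {
    "sargassum": [8.0, 10.0, 12.0, 14.0],
    "seagrass": [14.0, 12.0, 10.0, 8.0],
    "scarf": [10.0, 10.0, 10.0, 10.0],
}


def resample(contour: Sequence[float], n: int) -> List[float]:
    """Linearly interpolate a non-empty contour to exactly n points (n >= 2)."""
    if len(contour) == 1:
        return [float(contour[0])] * n
    out = []
    for i in range(n):
        pos = i * (len(contour) - 1) / (n - 1)
        lo = int(pos)
        hi = min(lo + 1, len(contour) - 1)
        frac = pos - lo
        out.append(contour[lo] * (1 - frac) + contour[hi] * frac)
    return out


def match_whistle(contour: Sequence[float], templates: Dict[str, List[float]],
                  max_error_khz: float = 1.0) -> Optional[str]:
    """Return the object whose template is closest, or None if nothing is close enough."""
    best_obj, best_err = None, float("inf")
    for obj, template in templates.items():
        observed = resample(contour, len(template))
        # Mean absolute pitch difference, in kHz.
        err = sum(abs(x - y) for x, y in zip(observed, template)) / len(template)
        if err < best_err:
            best_obj, best_err = obj, err
    return best_obj if best_err <= max_error_khz else None


if __name__ == "__main__":
    heard = [8.2, 9.1, 10.0, 11.1, 12.0, 13.2, 13.9]  # a hypothetical mimic
    print(match_whistle(heard, TEMPLATES))
```

For example, the upsweep above matches `"sargassum"`, while a flat 5 kHz contour matches nothing and returns `None`, so the researcher would not be told about a request.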

A Google Pixel 6 previously handled the high-fidelity, real-time analysis of dolphin sounds. The next-generation system, centered on a Google Pixel 9 (research planned for summer 2025), will extend this by integrating speaker and microphone functions and using the phone's more advanced processing to run deep learning models and template matching algorithms simultaneously.

Left: Dr. Denise Herzing wearing "Chat Senior, 2012". Right: Georgia Tech PhD student Charles Ramey wearing "Chat Junior, 2025".

Using Pixel smartphones greatly reduces the need for custom hardware, improves system maintainability, cuts power consumption, and lowers the device's cost and size, all key advantages for field research in the open ocean. Meanwhile, DolphinGemma's predictive capabilities can help CHAT anticipate and identify potential mimics earlier in the vocalization sequence, speeding up researchers' responses to the dolphins and making interactions smoother and more reinforcing.
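Identifying a mimic earlier in the vocalization sequence can be illustrated with prefix matching: score each template against only the pitch frames received so far and commit as soon as one template is both close and clearly ahead of the runner-up. This is a hypothetical stand-in for illustration; it does not use DolphinGemma, and the templates, thresholds, and `early_match` name are assumptions.

```python
from typing import Dict, Iterable, List, Optional, Tuple

# Hypothetical templates: pitch contours in kHz, sampled at a fixed frame rate.
TEMPLATES: Dict[str, List[float]] = {
    "sargassum": [8.0, 9.0, 10.0, 11.0, 12.0, 13.0],
    "seagrass": [13.0, 12.0, 11.0, 10.0, 9.0, 8.0],
    "scarf": [10.0, 10.0, 10.0, 10.0, 10.0, 10.0],
}


def early_match(frames: Iterable[float], templates: Dict[str, List[float]],
                max_error: float = 0.5, margin: float = 1.0,
                min_frames: int = 2) -> Tuple[Optional[str], int]:
    """Consume pitch frames one at a time; stop as soon as one template clearly wins.

    Returns (matched object or None, number of frames consumed).
    """
    seen: List[float] = []
    for f in frames:
        seen.append(f)
        k = len(seen)
        if k < min_frames:
            continue
        errors: List[Tuple[float, str]] = []
        for obj, template in templates.items():
            if k > len(template):
                continue  # the observation is already longer than this template
            # Mean absolute error over the prefix received so far.
            err = sum(abs(a - b) for a, b in zip(seen, template)) / k
            errors.append((err, obj))
        if not errors:
            break
        errors.sort()
        best_err, best_obj = errors[0]
        runner_up = errors[1][0] if len(errors) > 1 else float("inf")
        if best_err <= max_error and runner_up - best_err >= margin:
            return best_obj, k
    return None, len(seen)


if __name__ == "__main__":
    stream = [8.1, 9.0, 10.1, 11.0, 12.2, 13.0]  # a hypothetical incoming mimic
    print(early_match(stream, TEMPLATES))
```

Here `early_match` returns `("sargassum", 2)`, committing after two of six frames. The `margin` parameter trades speed for reliability: a larger margin waits for more evidence before committing.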

A Google Pixel 9 inside the latest CHAT system hardware.

Sharing DolphinGemma with the Research Community

Understanding the importance of collaboration in scientific discovery, we're set to share DolphinGemma as an open model this summer. Though trained on Atlantic spotted dolphin sounds, we believe it could be useful for researchers studying other cetacean species, like bottlenose or spinner dolphins. Some fine-tuning might be needed for different species' vocalizations, but the open model allows for this kind of adaptation.

By making tools like DolphinGemma available, we aim to equip researchers worldwide with the means to analyze their own acoustic datasets, speed up the search for patterns, and collectively enhance our understanding of these intelligent marine mammals.

The journey to understanding dolphin communication is long and winding, but the combined efforts of WDP's dedicated field research, Georgia Tech's engineering prowess, and Google's technological might are opening up exciting new possibilities. We're not just listening anymore; we're beginning to understand the patterns within the sounds, setting the stage for a future where the gap between human and dolphin communication might just narrow a bit more.

You can delve deeper into the Wild Dolphin Project on their website.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

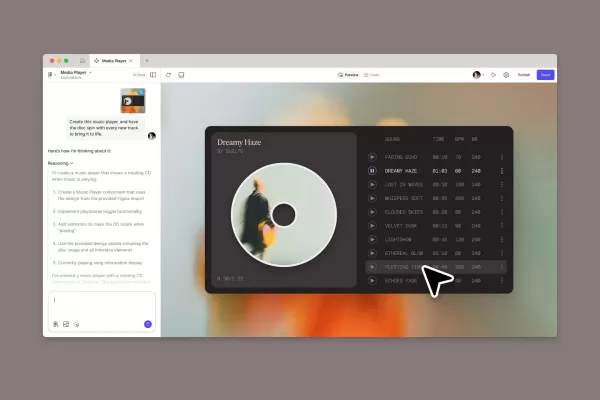

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (8)

0/200

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (8)

0/200
