Generative AI Potentially Increases Workload Rather Than Saving Time

The Double-Edged Sword of Generative AI

Generative artificial intelligence (AI) tools are often touted as time-savers and productivity boosters. They can indeed help you run code or generate reports in a snap, but there's a catch: the behind-the-scenes work required to develop and maintain large language models (LLMs) may demand more human effort than the tools save. Moreover, many tasks could be handled efficiently by simpler automation, without the heavy artillery of AI.

Peter Cappelli, a management professor at the University of Pennsylvania's Wharton School, shared these insights at a recent MIT event. He argues that, on a broader scale, generative AI and LLMs may create more work than they eliminate. The models are complex to implement, and, as Cappelli pointed out, "it turns out there are many things generative AI could do that we don't really need doing."

The Hype vs. Reality of AI

While AI is often celebrated as a revolutionary technology, Cappelli warns that tech industry projections can be overly optimistic. "In fact, most of the technology forecasts about work have been wrong over time," he stated. He cited the example of driverless trucks and cars, which were supposed to be widespread by 2018 but have yet to fully materialize.

The grand visions of tech-driven transformation often stumble over practical challenges. Advocates for autonomous vehicles focused on what these vehicles could do, rather than addressing the necessary regulatory clearances, insurance issues, and software complexities. Cappelli also noted that truck drivers perform a range of tasks beyond just driving, complicating the transition to autonomous vehicles.

Generative AI in Software Development and Business

A similar complexity arises when applying generative AI to software development and business operations. Cappelli highlighted that programmers spend a significant portion of their time on non-coding activities, such as communication and budget negotiations. "Even on the programming side, not all of that is actually programming," he said.

The promise of AI-driven innovation is exciting, but implementation often runs into real-world hurdles. In practice, the labor-saving and productivity benefits of generative AI may be outweighed by the extensive back-end work needed to support LLMs and the algorithms built on them.

New Work Generated by AI

Both generative and operational AI introduce new tasks, according to Cappelli. "People have to manage databases, they have to organize materials, they have to resolve these problems of dueling reports, validity, and those sorts of things. It's going to generate a lot of new tasks, somebody is going to have to do those."

Even operational AI, which has been around for a while, continues to evolve. Cappelli noted that machine learning with numerical data has been underutilized, partly due to challenges in database management. "It takes a lot of effort just to put the data together so you can analyze it. Data is often in different silos in different organizations, which are politically difficult and just technically difficult to put together."

Challenges in Adopting Generative AI and LLMs

Cappelli identified several hurdles in the adoption of generative AI and LLMs:

- Overkill for Many Tasks: "There are lots of things that large language models can do that probably don't need doing," he said. For instance, while business correspondence is a potential use case, much of it is already handled by form letters and basic automation. "A form letter has already been cleared by lawyers, and anything written by large language models has probably got to be seen by a lawyer. And that is not going to be any kind of a time saver."

- Increasing Costs: Cappelli warned that replacing rote automation with AI might become more expensive. "It's not so clear that large language models are going to be as cheap as they are now. As more people use them, computer space has to go up, electricity demands alone are big. Somebody's got to pay for it."

- Need for Validation: Generative AI outputs may be suitable for simple tasks like emails, but for more complex reports, validation is crucial. "If you're going to use it for something important, you better be sure that it's right. And how are you going to know if it's right? Well, it helps to have an expert; somebody who can independently validate and knows something about the topic. To look for hallucinations or quirky outcomes, and that it is up-to-date."

- Information Overload: The ease of generating reports and outputs can lead to an overwhelming amount of information, sometimes contradictory. "Because it's pretty easy to generate reports and output, you're going to get more responses," Cappelli said. He also noted that LLMs might produce different responses to the same prompt, raising reliability concerns. "This is a reliability issue -- what would you do with your report? You generate one that makes your division look better, and you give that to the boss."

- Human Decision-Making: People often prefer to make decisions based on gut feelings or personal preferences, which can be challenging for machines to influence. Cappelli pointed out that even when AI could assist in decision-making, such as in hiring, people tend to follow their instincts. "Machine learning could already do that for us. If you built the model, you would find that your line managers who are already making the decisions don't want to use it."
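To make the "form letters and basic automation" point concrete, here is a minimal sketch of the kind of non-AI automation Cappelli contrasts with LLM drafting: a fixed, pre-approved letter whose only variable parts are a few fields. The template wording and the field names (`name`, `date`, `order_id`, `days`) are hypothetical, chosen only for illustration; the sketch uses Python's standard `string.Template`.

```python
from string import Template

# Hypothetical pre-approved form letter: the wording is fixed (and, per
# Cappelli's point, already cleared by lawyers); only the fields change.
APPROVED_LETTER = Template(
    "Dear $name,\n"
    "\n"
    "We received your request dated $date regarding order $order_id.\n"
    "A member of our team will respond within $days business days.\n"
    "\n"
    "Sincerely,\n"
    "Customer Service"
)


def render_letter(fields: dict[str, str]) -> str:
    """Fill the approved template deterministically.

    substitute() raises KeyError if any placeholder is missing, so an
    incomplete record fails loudly instead of producing a partial letter.
    """
    return APPROVED_LETTER.substitute(fields)


if __name__ == "__main__":
    record = {"name": "A. Customer", "date": "2024-05-01",
              "order_id": "12345", "days": "3"}
    # The same input always yields the same, already-reviewed text.
    print(render_letter(record))
```

Because the output is fully determined by the approved template and the input fields, there is nothing new for a lawyer or domain expert to review on each run, which is the time-saving property Cappelli argues LLM-drafted correspondence lacks.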

Potential Near-Term Applications

Despite these challenges, Cappelli sees potential in using generative AI to sift through data and support decision-making. "We are washing data right now that we haven't been able to analyze ourselves," he said. "It's going to be way better at doing that than we are." At the same time, managing databases and addressing data pollution will remain critical tasks.

Related article

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

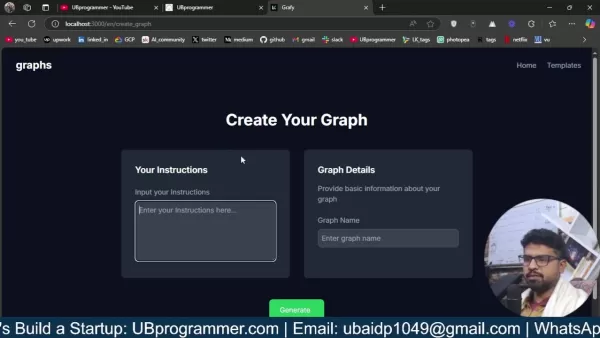

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

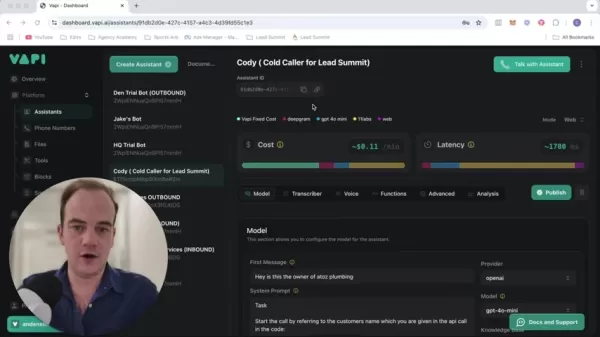

Transform Your Sales Strategy: AI Cold Calling Technology Powered by Vapi

Modern businesses operate at lightning speed, demanding innovative solutions to stay competitive. Picture revolutionizing your agency's outreach with an AI-powered cold calling system that simultaneously engages dozens of prospects - all running auto

Comments (3)


TimothyWilliams, August 16, 2025 at 5:00:59 AM EDT

Generative AI sounds cool, but it’s like getting a fancy new tool that takes ages to learn how to use properly. More work to save work? Ironic! 😅

ChloeGreen, August 15, 2025 at 7:00:59 PM EDT

Generative AI sounds like a dream, but it’s piling on more work for me! 😩 Fixing bugs in AI-generated code takes longer than writing it myself sometimes.

WilliamAnderson, July 27, 2025 at 9:18:39 PM EDT

I thought generative AI was supposed to make life easier, but this article's got a point—it's like trading one workload for another! 😅 Still, the coding speed-up is nice, even if I’m now babysitting AI models.

