Gemini Unveils Enhanced Model, Extended Context, AI Agents

Back in December, we rolled out our first natively multimodal model, Gemini 1.0, available in three sizes: Ultra, Pro, and Nano. Fast forward a few months, and we introduced 1.5 Pro, boasting enhanced performance and a groundbreaking long context window of 1 million tokens.

Developers and enterprise customers have been leveraging 1.5 Pro in some pretty amazing ways, appreciating its long context window, robust multimodal reasoning, and overall stellar performance.

Feedback from users highlighted the need for models with lower latency and cost, which spurred us to keep pushing the envelope. That's why we're excited to introduce Gemini 1.5 Flash today. This model is lighter-weight than 1.5 Pro and designed to be fast and efficient to serve at scale.

Both 1.5 Pro and 1.5 Flash are now in public preview, with a 1 million token context window, accessible through Google AI Studio and Vertex AI. And for those who need even more, 1.5 Pro now offers a 2 million token context window, available via waitlist to developers using the API and Google Cloud customers.

We're not stopping there. We're also rolling out updates across the entire Gemini family, unveiling our next generation of open models, Gemma 2, and making strides in the future of AI assistants with Project Astra.

Context lengths of leading foundation models compared with Gemini 1.5’s 2 million token capability

Gemini family of model updates

The new 1.5 Flash, optimized for speed and efficiency

Introducing 1.5 Flash, the latest and fastest member of the Gemini family, served through our API. It's tailored for high-volume, high-frequency tasks, offering cost-effective scalability while maintaining our long context window breakthrough.

Although lighter than 1.5 Pro, 1.5 Flash is no slouch. It is highly capable of multimodal reasoning across vast amounts of information and delivers impressive quality for its size.

The new Gemini 1.5 Flash model is optimized for speed and efficiency, is highly capable of multimodal reasoning and features our breakthrough long context window.

1.5 Flash shines in tasks like summarization, chat applications, and captioning images and videos. It's also adept at extracting data from long documents and tables. This versatility comes from 1.5 Flash being trained by 1.5 Pro through a process called "distillation," in which the most essential knowledge and skills of a larger model are transferred to a smaller, more efficient one.
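The post doesn't describe the exact training recipe used for 1.5 Flash. As a minimal sketch of the general idea only, the classic soft-target formulation of knowledge distillation (Hinton et al., 2015) trains a smaller "student" model to match the temperature-softened output distribution of a larger "teacher." The function names and the default temperature below are illustrative assumptions, not Google's method.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: List[float],
                      student_logits: List[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions.

    Multiplied by temperature**2, as in the classic soft-target formulation,
    so gradient magnitudes stay comparable across temperatures.
    """
    p = softmax(teacher_logits, temperature)  # soft targets from the larger model
    q = softmax(student_logits, temperature)  # predictions of the smaller model
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]
    close_student = [3.8, 1.1, 0.4]  # roughly agrees with the teacher
    far_student = [0.2, 3.0, 1.0]    # prefers a different class
    print(distillation_loss(teacher, close_student))  # small loss
    print(distillation_loss(teacher, far_student))    # much larger loss
```

A student whose outputs already track the teacher's incurs almost no loss, while one that ranks the classes differently is penalized heavily. In practice this term is usually combined with an ordinary loss on ground-truth labels.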

For more details on 1.5 Flash, check out our updated Gemini 1.5 technical report, the Gemini technology page, and learn about its availability and pricing.

Significantly improving 1.5 Pro

Over the past few months, we've made significant strides in enhancing 1.5 Pro, our top performer across a wide range of tasks.

We've expanded its context window to 2 million tokens and improved its capabilities in code generation, logical reasoning, planning, multi-turn conversations, and understanding audio and images. These enhancements are backed by advances in data and algorithms, showing marked improvements on both public and internal benchmarks.

1.5 Pro now handles increasingly complex and nuanced instructions, including those that define product-level behaviors like role, format, and style. We've refined control over the model's responses for specific use cases, such as customizing chat agent personas or automating workflows with multiple function calls. Users can now steer the model's behavior with system instructions.
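To make "system instructions" concrete, here is a minimal sketch of a request body that pairs a persona/format instruction with a user turn. It assumes the Gemini API's v1beta REST `generateContent` request shape (a `system_instruction` object alongside `contents`), following the public REST examples at the time of writing; the model id `gemini-1.5-pro`, the endpoint string, and the helper name `build_request` are illustrative assumptions, so verify field names against the current API reference. Nothing is sent over the network here.

```python
import json
from typing import Any, Dict

# Assumed endpoint for the REST generateContent method; the model id is illustrative.
ENDPOINT = ("https://generativelanguage.googleapis.com/v1beta/models/"
            "gemini-1.5-pro:generateContent")


def build_request(system_instruction: str, user_message: str) -> Dict[str, Any]:
    """Hypothetical helper: build a request body that pairs a system instruction
    (role, format, style) with a single user turn."""
    return {
        # Steers behavior for the whole conversation, separately from user turns.
        "system_instruction": {"parts": [{"text": system_instruction}]},
        "contents": [
            {"role": "user", "parts": [{"text": user_message}]},
        ],
    }


if __name__ == "__main__":
    body = build_request(
        "You are a concise support agent. Answer in at most two sentences.",
        "How do I reset my password?",
    )
    print(json.dumps(body, indent=2))
    # Sending it (not done here) would mean POSTing this JSON to ENDPOINT
    # with an API key.
```

Keeping the persona in a separate instruction, rather than prepending it to every user message, is what lets the same behavior persist across a multi-turn conversation.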

We've also added audio understanding to the Gemini API and Google AI Studio, allowing 1.5 Pro to process both images and audio from videos uploaded to Google AI Studio. We're integrating 1.5 Pro into Google products like Gemini Advanced and Workspace apps.

For more on 1.5 Pro, dive into our updated Gemini 1.5 technical report and the Gemini technology page.

Gemini Nano understands multimodal inputs

Gemini Nano is stepping up its game, moving beyond text-only inputs to include images. Starting with Pixel, apps using Gemini Nano with Multimodality will be able to interpret the world in a more human-like way, through text, visuals, sound, and spoken language.

Learn more about Gemini 1.0 Nano on Android.

Next generation of open models

Today, we're also updating Gemma, our family of open models, which are built on the same research and tech as the Gemini models.

We're launching Gemma 2, our next-gen open models for responsible AI innovation. Gemma 2 features a new architecture for superior performance and efficiency, and will come in new sizes.

The Gemma family is growing with PaliGemma, our first vision-language model inspired by PaLI-3. We've also upgraded our Responsible Generative AI Toolkit with LLM Comparator to assess model response quality.

For more details, head over to the Developer blog.

Progress developing universal AI agents

At Google DeepMind, our mission is to build AI responsibly to benefit humanity. We've always aimed to create universal AI agents that can assist in everyday life. That's why we're sharing our progress on the future of AI assistants with Project Astra (advanced seeing and talking responsive agent).

For an AI agent to be truly helpful, it needs to understand and react to the world like a human, taking in and remembering what it sees and hears to grasp context and act accordingly. It should also be proactive, teachable, and personal, allowing for natural, lag-free conversations.

While we've made great strides in processing multimodal information, achieving conversational response times is a tough engineering challenge. Over the years, we've been refining how our models perceive, reason, and converse to make interactions feel more natural.

Building on Gemini, we've developed prototype agents that process information faster by continuously encoding video frames, merging video and speech inputs into a timeline of events, and caching this data for quick recall.
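The post describes this pipeline only at a high level. The toy below is a hypothetical illustration of the "timeline of events" idea, not Project Astra's implementation: it assumes each input stream already yields time-stamped events (the frame and speech encoders are out of scope), merges the two streams in time order, and keeps a bounded cache that can be queried by time window. `Event`, `EventTimeline`, and `recall` are invented names.

```python
import bisect
import heapq
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Iterable, List


@dataclass(order=True)
class Event:
    timestamp: float                     # seconds since the session started
    source: str = field(compare=False)   # e.g. "video" or "speech"
    content: str = field(compare=False)  # e.g. a frame summary or transcript


class EventTimeline:
    """Hypothetical toy: merges time-ordered video and speech events into one
    timeline and caches only the most recent `capacity` events for recall."""

    def __init__(self, capacity: int = 1000) -> None:
        self._events: Deque[Event] = deque(maxlen=capacity)  # oldest evicted first

    def ingest(self, video: Iterable[Event], speech: Iterable[Event]) -> None:
        # Each stream is assumed sorted by timestamp, and successive batches are
        # assumed to arrive in time order, so the cache stays sorted.
        # heapq.merge interleaves sorted inputs lazily, without a full sort.
        for event in heapq.merge(video, speech):
            self._events.append(event)

    def recall(self, start: float, end: float) -> List[Event]:
        """Return cached events with start <= timestamp <= end."""
        events = list(self._events)
        times = [e.timestamp for e in events]
        lo = bisect.bisect_left(times, start)
        hi = bisect.bisect_right(times, end)
        return events[lo:hi]


if __name__ == "__main__":
    timeline = EventTimeline(capacity=100)
    video = [Event(0.0, "video", "desk with glasses"), Event(2.0, "video", "red apple")]
    speech = [Event(1.0, "speech", "where did I leave my glasses?")]
    timeline.ingest(video, speech)
    print([e.content for e in timeline.recall(0.0, 1.5)])
```

Run as a script, the query over the first 1.5 seconds returns the glasses frame and the spoken question together, which is the kind of cross-modal context the prototype agents are described as recalling quickly.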

By using our top-tier speech models, we've also improved how these agents sound, giving them a broader range of intonations. They can better understand the context they're in and respond swiftly in conversation.

With this technology, it's easy to imagine a future where everyone has an expert AI assistant at their side, accessible through a phone or glasses. Some of these capabilities will be coming to Google products like the Gemini app and web experience later this year.

Continued exploration

We've come a long way with our Gemini family of models, and we're committed to pushing the boundaries even further. Through relentless innovation, we're exploring new frontiers while unlocking exciting new use cases for Gemini.

To learn more about Gemini and its capabilities, check out our resources.

Get more stories from Google in your inbox.Get more stories from Google in your inbox.

Get more stories from Google in your inbox.Get more stories from Google in your inbox.

Email addressYour information will be used in accordance withGoogle's privacy policy.

SubscribeDone. Just one step more.

Check your inbox to confirm your subscription.

You are already subscribed to our newsletter.

You can also subscribe with adifferent email address.

Related article

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

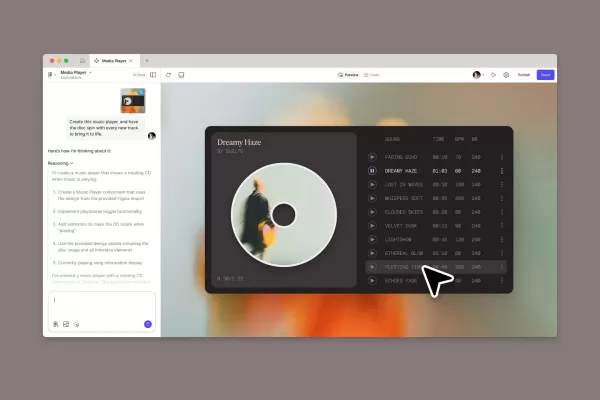

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

R1: Chinese Tech Giant Unveils Optimus-Rivaling Humanoid Robot

Ant Group Unveils First Humanoid Robot Prototype

The payments giant's robotics division has debuted its R1 humanoid at major tech events, showcasing automated cooking demonstrations and signaling ambitions beyond simple manufacturing applications.

P

Comments (25)

LucasWalker

April 18, 2025 at 5:37:58 PM EDT

I can't believe Gemini's new model has a 1 million token context! 🤯 It's like having a super-smart AI that can handle anything. The AI agents are a game changer too. I'm looking forward to seeing what they release next! 🚀

FrankSmith

April 15, 2025 at 8:37:56 PM EDT

Gemini's new model is really awesome! The 1 million token context window is truly amazing. It's like having a smart friend who remembers every conversation! I wish it were a little faster, but you can't have everything, right? 🤓

JamesMiller

April 15, 2025 at 1:53:33 PM EDT

Gemini's new model is pretty cool! The 1 million token context window is crazy; it's like having a super-smart friend who remembers everything you've ever said! I just wish it were a bit faster, but hey, you can't have it all, right? 🤓

MarkRoberts

April 14, 2025 at 9:25:31 PM EDT

The new Gemini model is impressive, especially the long context window. It's great for developers, but it can be a bit overwhelming for beginners. The AI agents are great, but I wish there were more documentation on how to use them effectively.

BillyGarcia

April 14, 2025 at 3:20:08 PM EDT

Gemini's new model with a one million token context is insane! 🤯 It's like having a super-intelligent AI that can handle anything. The AI agents are a game changer too. I can't wait to see what they release next! 🚀

RogerRoberts

April 14, 2025 at 1:06:25 PM EDT

The new Gemini model is pretty cool! The 1 million token context window is crazy; it's like having a super-smart friend who remembers everything you've ever said. I wish it were a bit faster, but hey, you can't have everything, right? 🤓
