Debates over AI benchmarking have reached Pokémon

Even the beloved world of Pokémon isn't immune to the drama surrounding AI benchmarks. A recent viral post on X claimed that Google's latest Gemini model had outpaced Anthropic's flagship Claude model in the classic Pokémon video game trilogy. According to the post, Gemini had reached Lavender Town in a developer's Twitch stream, while Claude was still stuck at Mount Moon as of late February.

Gemini is literally ahead of Claude atm in pokemon after reaching Lavender Town

119 live views only btw, incredibly underrated stream pic.twitter.com/8AvSovAI4x

— Jush (@Jush21e8) April 10, 2025

However, the post left out that Gemini had an advantage. Users on Reddit quickly pointed out that the developer behind the Gemini stream had built a custom minimap that helps the model identify "tiles" in the game, such as cuttable trees. That reduces how much time Gemini needs to spend analyzing screenshots before deciding on its next move.

Now, while Pokémon might not be the most serious AI benchmark out there, it is a telling example of how different setups can skew the results of these tests. Take Anthropic's recent model, Claude 3.7 Sonnet. On SWE-bench Verified, a benchmark meant to test coding ability, it scored 62.3% accuracy. With a "custom scaffold" that Anthropic developed, that score rose to 70.3%.
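To put the size of that gap in perspective, here is a minimal sketch of the arithmetic, using only the two SWE-bench Verified scores quoted above. The function name `scaffold_gain` is hypothetical and chosen purely for illustration; it is not part of any benchmark tooling.

```python
def scaffold_gain(baseline_pct: float, scaffold_pct: float) -> tuple[float, float]:
    """Return (gain in percentage points, gain relative to the baseline in percent)."""
    absolute = scaffold_pct - baseline_pct
    relative = absolute / baseline_pct * 100
    # Round to one decimal place, matching the precision of the reported scores.
    return round(absolute, 1), round(relative, 1)


# The two scores reported for the same model on the same benchmark.
points, relative = scaffold_gain(62.3, 70.3)
print(f"{points} percentage points, {relative}% relative to the baseline")
```

In other words, the scaffold alone accounts for 8.0 percentage points, or roughly 12.8% relative to the baseline score, without any change to the underlying model.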

And it doesn't stop there. Meta fine-tuned a version of one of its newer models, Llama 4 Maverick, specifically to perform well on the LM Arena benchmark. The vanilla version of the model didn't fare nearly as well on the same test.

Given that AI benchmarks, Pokémon included, are imperfect measures to begin with, custom and non-standard setups make it even harder to draw meaningful comparisons between models as they are released. Comparing apples to apples seems to be getting harder by the day.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

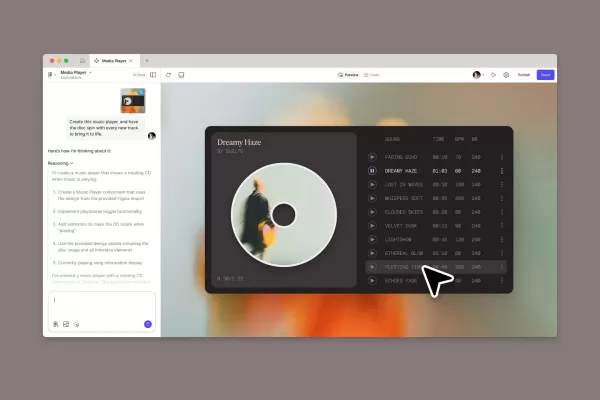

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (6)


DouglasMartínez

August 6, 2025 at 1:01:00 PM EDT

Whoa, AI playing Pokémon? That's wild! I wonder if Gemini's got a secret Pikachu strategy or just brute-forced its way through. Gotta catch 'em all, I guess! ⚡️

JasonKing

May 5, 2025 at 7:38:52 AM EDT

Debates over AI benchmarking in Pokémon? That's wild! I never thought I'd see the day when AI models are compared using Pokémon games. It's fun but kinda confusing. Can someone explain how Gemini outpaced Claude? 🤯

NicholasAdams

May 4, 2025 at 7:11:33 PM EDT

Debating AI benchmarks with Pokémon? Unbelievable! I never thought the day would come when AI models would be compared in Pokémon games. It's interesting, but a little confusing. Can someone explain how Gemini overtook Claude? 🤯

WalterThomas

May 4, 2025 at 11:05:10 AM EDT

A debate over AI benchmarking in Pokémon? That's crazy! I never thought I'd see AI models being compared using Pokémon games. It's fun but a little confusing. Can anyone explain how Gemini beat Claude? 🤯

AlbertThomas

May 4, 2025 at 2:38:28 AM EDT

A debate over AI benchmarking in Pokémon? This is really surprising! I never thought the day would come when AI models would be compared through Pokémon games. It's fun, but a bit confusing. Can anyone explain how Gemini got ahead of Claude? 🤯

CharlesRoberts

May 3, 2025 at 3:01:44 PM EDT

Debates over AI benchmarking in Pokémon? That's crazy! I never thought I'd see the day when AI models would be compared using Pokémon games. It's fun, but a bit confusing. Can someone explain how Gemini surpassed Claude? 🤯
