2024 AI Responsibility Report Released

Two years ago, we shared our vision for advancing AI to benefit society and drive innovation. A few weeks ago, we published our 2024 update on the progress we've made, from state-of-the-art models that boost creativity to AI-driven breakthroughs in biology, health research, and neuroscience.

But being bold with AI means being responsible from the start. That's why our approach to AI has always been grounded in understanding and addressing its wide-ranging impact on people. Back in 2018, we were among the first to publish AI principles, and we've put out an annual transparency report since 2019. We keep adjusting our policies, practices, and frameworks as the technology changes.

The 2024 Responsible AI Progress Report

Our sixth annual Responsible AI Progress Report details how we handle AI risks throughout the development process. It also covers the progress we've made over the past year in setting up governance structures for our AI product launches.

We're investing more than ever in AI research and products that help people and society, as well as in AI safety and in identifying potential risks.

The report highlights more than 300 research papers from our teams on responsibility and safety, updates to our AI policies, and what we've learned from red teaming and evaluations against safety, privacy, and security benchmarks. We've also made headway in risk mitigation across different AI launches, with better safety tuning and filters, security and privacy controls, provenance technology in our products, and a push for broader AI literacy. Throughout 2024, we've also supported the wider AI community with funding, tools, and standards development, as detailed in the report.

An Update to Our Frontier Safety Framework

As AI keeps evolving, new capabilities can bring new risks. That's why we rolled out the first version of our Frontier Safety Framework last year—a set of protocols to help us stay on top of potential risks from powerful frontier AI models. We've been working with experts from industry, academia, and government to better understand these risks, how to test for them, and how to mitigate them.

We've also put the Framework into action in our Google DeepMind safety and governance processes for evaluating frontier models like Gemini 2.0. Today, we're releasing an updated Frontier Safety Framework, which includes:

- Recommendations for Heightened Security: pinpointing where we need to beef up efforts to prevent data leaks.

- Deployment Mitigations Procedure: focusing on stopping the misuse of critical system capabilities.

- Deceptive Alignment Risk: tackling the risk of an autonomous system intentionally undermining human control.

You can dive deeper into this on the Google DeepMind blog.

Updating AI Principles

Since we first published our AI Principles in 2018, AI tech has been moving at breakneck speed. Now, billions of people are using AI in their daily lives. It's become a go-to technology, a platform that countless organizations and individuals use to build apps. AI has gone from a niche lab topic to something as widespread as mobile phones and the internet, with tons of beneficial uses for society and people worldwide, backed by a thriving AI ecosystem of developers.

Having common baseline principles is key to this evolution. It's not just AI companies and academic institutions; we're seeing progress on AI principles globally. The G7, the International Organization for Standardization, and various democratic nations have all put out frameworks to guide the safe development and use of AI. More and more, organizations and governments are using these common standards to figure out how to build, regulate, and deploy this evolving tech—our Responsible AI Progress Report, for instance, now follows the United States' NIST Risk Management Framework. Our experience and research over the years, along with the threat intelligence, expertise, and best practices we've shared with other AI companies, have given us a deeper understanding of AI's potential and risks.

There's a global race for AI leadership in an increasingly complex geopolitical landscape. We believe democracies should lead the way in AI development, guided by core values like freedom, equality, and respect for human rights. And we think companies, governments, and organizations that share these values should team up to create AI that protects people, boosts global growth, and supports national security.

With that in mind, we're updating our AI Principles to focus on three core tenets:

- Bold Innovation: We're developing AI to help, empower, and inspire people in almost every field, drive economic progress, improve lives, enable scientific breakthroughs, and tackle humanity's biggest challenges.

- Responsible Development and Deployment: We get that AI, as an emerging transformative technology, brings new complexities and risks. That's why we're committed to pursuing AI responsibly throughout the development and deployment lifecycle—from design to testing to deployment to iteration—learning as AI advances and its uses evolve.

- Collaborative Progress, Together: We learn from others and build technology that empowers others to use AI positively.

You can check out our full AI Principles on AI.google.

Guided by our AI Principles, we'll keep focusing on AI research and applications that align with our mission, our scientific focus, and our areas of expertise, and that stay consistent with widely accepted principles of international law and human rights. We'll always evaluate specific work by carefully assessing whether the benefits substantially outweigh potential risks. We'll also consider whether our engagements require custom research and development or can rely on general-purpose, widely available technology. These assessments are crucial as AI is increasingly being developed by numerous organizations and governments for uses in fields like healthcare, science, robotics, cybersecurity, transportation, national security, energy, climate, and more.

Of course, in addition to the Principles, we continue to have specific product policies and clear terms of use that include prohibitions on, for example, illegal use of our services.

The Opportunity Ahead

We know that the underlying technology, and the debate around AI's advancement, deployment, and uses, will keep evolving quickly, and we'll keep adapting and refining our approach as we all learn over time.

As we see AGI, in particular, coming into sharper focus, the societal implications become incredibly profound. This isn't just about developing powerful AI; it's about building the most transformative technology in human history, using it to solve humanity's biggest challenges, and ensuring that the right safeguards and governance are in place, for the benefit of the world. We'll keep sharing our progress and findings about this journey, and expect to continue to evolve our thinking as we move closer to AGI.

As we move forward, we believe that the improvements we've made over the last year to our governance and other processes, our new Frontier Safety Framework, and our AI Principles position us well for the next phase of AI transformation. The opportunity of AI to assist and improve the lives of people around the world is what ultimately drives us in this work, and we'll keep pursuing our bold, responsible, and collaborative approach to AI.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will
Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

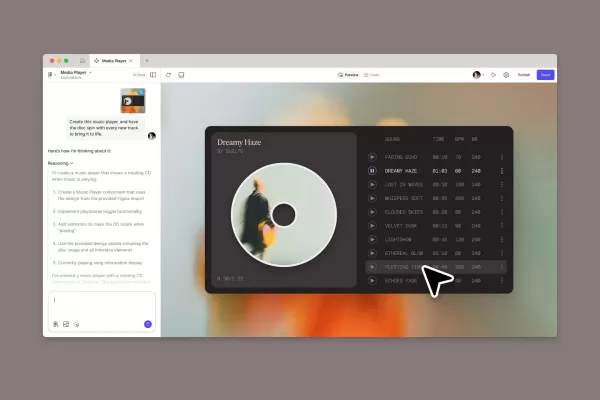

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (35)


ThomasRoberts

September 1, 2025 at 6:30:32 PM EDT

The AI breakthroughs in biology that this report mentions are pretty appealing, but I wonder when these technologies will actually reach patients... Hopefully it won't be all talk and little delivery, like some earlier medical AI 🧐

LawrencePerez

August 16, 2025 at 3:01:01 PM EDT

This report sounds like a big step forward! I'm amazed at how AI is transforming biology and health research. Can't wait to see what new doors this opens! 😎

RoyMitchell

August 7, 2025 at 9:00:59 AM EDT

Wow, the 2024 AI Responsibility Report sounds like a big leap! I'm amazed at how AI's pushing boundaries in health and creativity. But, like, are we sure it’s all safe? 🤔 Excited to see where this goes!

JustinMitchell

July 27, 2025 at 9:20:02 PM EDT

Wow, the 2024 AI Responsibility Report sounds like a big leap forward! I'm amazed at how AI is diving into biology and health research. Makes me wonder what crazy breakthroughs we'll see next. 🤯 Any chance we'll get AI doctors soon?

ScottWalker

July 27, 2025 at 9:19:05 PM EDT

This report sounds like a big step forward! I'm amazed at how AI is transforming biology and health research. Can't wait to see what new breakthroughs come next. 😎

LawrenceJones

April 18, 2025 at 7:05:22 PM EDT

The 2024 AI Responsibility Report is impressive! It's incredible to see how AI is pushing the limits in health and biology. But I wish there were more on how they're addressing ethical issues. Still, it's a solid step forward. Keep up the good work, and maybe next time go deeper into the ethics? 🌟
