Vellum

LLM App Development Platform

Vellum Product Information

Ever wondered how to make the most of large language models (LLMs)? That's where Vellum comes in. It's a development platform for building LLM apps, with a suite of tools that covers the whole development process: prompt engineering, semantic search, version control, testing, and monitoring. And the best part? It works with all the major LLM providers.

Getting Started with Vellum

So, you're ready to put Vellum to work? Here's what it offers. Vellum supports two main use cases: building new LLM-powered applications and adding LLM features to existing projects. It is designed for rapid experimentation, so you can test, iterate on, and refine your prompts and models quickly. Once your changes go live, regression testing, version control, and monitoring help you keep them in check. You can also bring your own proprietary data into Vellum and use it as context in your LLM calls. Finally, its user-friendly interface makes it easy to compare prompts and models and collaborate on them with your team.

Vellum's Core Features

Prompt Engineering

Crafting the perfect prompt is an art, and Vellum gives you the canvas to master it.

Semantic Search

Find what you're looking for with precision, thanks to Vellum's semantic search capabilities.

Version Control

Keep track of every change and iteration with Vellum's robust version control system.

Testing

Ensure your LLM app is up to snuff with thorough testing features.

Monitoring

Stay on top of your app's performance with real-time monitoring tools.

What Can You Do with Vellum?

Vellum isn't just a one-trick pony. Here are some of the cool things you can do with it:

- Workflow Automation: Streamline your processes with LLM-powered automation.

- Document Analysis: Dive deep into documents and extract valuable insights.

- Copilots: Build AI assistants that can help with coding, writing, or any task you throw at them.

- Fine-tuning: Refine your models with Vellum's fine-tuning capabilities.

- Q&A over Docs: Answer questions based on document content with ease.

- Intent Classification: Understand user intent better with advanced classification.

- Summarization: Sum up long texts quickly and efficiently.

- Vector Search: Perform powerful searches using vector embeddings.

- LLM Monitoring: Keep an eye on your LLM's performance at all times.

- Chatbots: Create engaging and helpful chatbots that users will love.

- Semantic Search: Go beyond keywords with semantic search capabilities.

- LLM Evaluation: Assess how well your LLMs perform with Vellum's evaluation tools.

- Sentiment Analysis: Understand the emotions behind the text.

- Custom LLM Evaluation: Tailor your evaluation metrics to your specific needs.

- AI Chatbots: Build advanced chatbots powered by LLMs.

Frequently Asked Questions About Vellum

- What can Vellum help me build?

- Vellum can help you build a wide range of LLM-powered applications, from workflow automation to chatbots and beyond.

- What LLM providers are compatible with Vellum?

- Vellum is compatible with all major LLM providers, ensuring flexibility and choice.

- What are the core features of Vellum?

- The core features include prompt engineering, semantic search, version control, testing, and monitoring.

- Can I compare and collaborate on prompts and models using Vellum?

- Absolutely. Vellum's user-friendly UI makes it easy to compare and collaborate on prompts and models.

- Does Vellum support version control?

- Yes, Vellum provides robust version control to track changes and iterations.

- Can I use my own data as context in LLM calls?

- Yes, you can bring your own proprietary data into Vellum and use it as context in your LLM calls.

- Is Vellum provider agnostic?

- Yes, Vellum works seamlessly with all major LLM providers.

- Does Vellum offer a personalized demo?

- Yes, you can request a personalized demo to see Vellum in action.

- What do customers say about Vellum?

- Customers rave about Vellum's ease of use, comprehensive features, and its ability to streamline LLM app development.

Want to connect with the Vellum community? Join the conversation on the Vellum Discord.

Need help or have questions? Reach out to Vellum's support team by email. For more contact options, visit the Contact Us page.

Vellum is brought to you by Vellum AI. Connect with them on LinkedIn to stay updated on the latest developments.

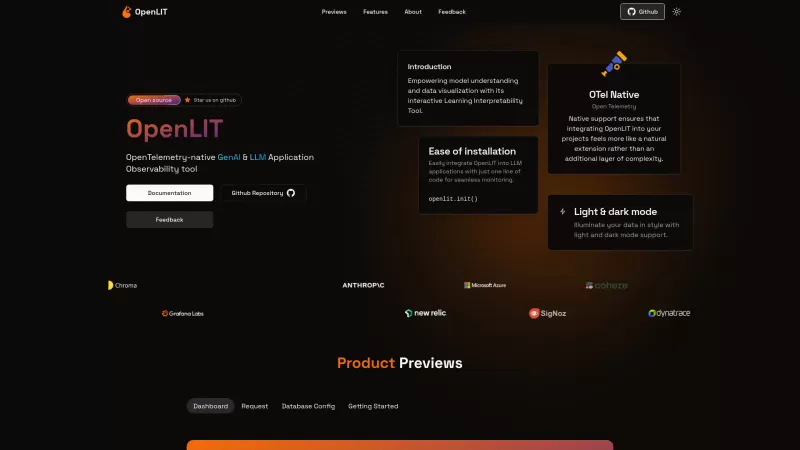

Vellum Screenshot
