Mistral Unveils Advanced Code Embedding Model Outperforming OpenAI and Cohere in Real-World Retrieval Tasks

Mistral Enters the Embedding Arena with Codestral Embed

As enterprise retrieval-augmented generation (RAG) gains traction, demand for better embedding models is growing. Mistral, the French AI company, recently unveiled Codestral Embed, its first embedding model, built specifically for code.

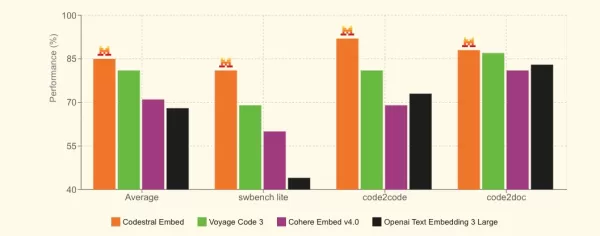

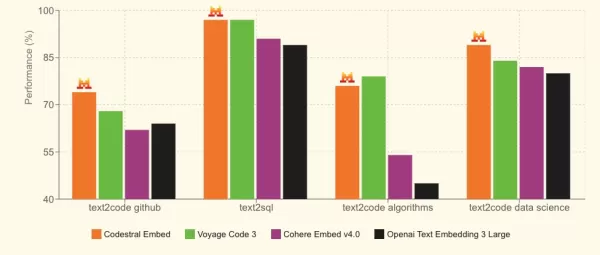

According to Mistral, Codestral Embed outperforms existing models on benchmarks such as SWE-Bench, and it performs especially well when retrieving real-world code data. The model is available to developers at $0.15 per million tokens.
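To put the quoted price in perspective, here is a minimal sketch of the arithmetic. Only the $0.15 per million tokens figure comes from the article; the function name and the 50-million-token corpus size are hypothetical, chosen purely for illustration.

```python
# Price quoted in the article: $0.15 per million tokens.
PRICE_PER_MILLION_TOKENS_USD = 0.15


def embedding_cost_usd(total_tokens: int,
                       price_per_million: float = PRICE_PER_MILLION_TOKENS_USD) -> float:
    """Estimate the cost of embedding `total_tokens` tokens once."""
    return total_tokens / 1_000_000 * price_per_million


# Hypothetical corpus size, not a figure from the article.
corpus_tokens = 50_000_000
print(f"Estimated cost: ${embedding_cost_usd(corpus_tokens):.2f}")
```

Under these assumptions, embedding the whole corpus once would cost $7.50. Re-embedding after code changes would add to that in proportion to the tokens reprocessed.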

In its announcement, Mistral said Codestral Embed surpasses leading code embedders such as Voyage Code 3, Cohere Embed v4.0, and OpenAI’s Text Embedding 3 Large. The claim drew attention in the tech community and prompted discussion on X (formerly Twitter).

Super excited to announce @MistralAI Codestral Embed, our first embedding model specialized for code.

It performs especially well for retrieval use cases on real-world code data. pic.twitter.com/ET321cRNli

— Sophia Yang, Ph.D. (@sophiamyang) May 28, 2025

Codestral Embed, part of Mistral’s Codestral family of coding models, converts code and data into numerical representations (embeddings) that a RAG pipeline can search. The model can output embeddings at different dimensions and precisions, letting users trade retrieval quality against storage cost. Mistral says that even with a dimension of 256 and int8 precision, Codestral Embed outperforms competitors' models.
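The storage side of that trade-off can be sketched with simple arithmetic. The example below is a generic illustration, not Mistral's method: the 1536-dimension float32 baseline is a hypothetical comparison point, and `truncate_and_quantize` assumes that keeping the leading dimensions and scaling them into the int8 range is an acceptable way to shrink a vector, which the article does not confirm.

```python
def bytes_per_vector(dimensions: int, bytes_per_value: int) -> int:
    """Raw storage for one embedding, ignoring any index overhead."""
    return dimensions * bytes_per_value


def truncate_and_quantize(vector: list[float], dimensions: int) -> list[int]:
    """Keep the first `dimensions` values and scale them into [-127, 127].

    Assumption: this is a generic quantization sketch, not the scheme
    Codestral Embed uses internally.
    """
    head = vector[:dimensions]
    # Largest magnitude in the kept slice; fall back to 1.0 to avoid dividing by zero.
    peak = max((abs(v) for v in head), default=0.0) or 1.0
    return [round(v / peak * 127) for v in head]


compact = bytes_per_vector(256, 1)     # 256 dimensions at int8 (1 byte each)
baseline = bytes_per_vector(1536, 4)   # hypothetical 1536-dim float32 baseline
print(compact, baseline, baseline // compact)
```

With these hypothetical numbers, the compact vector takes 256 bytes against 6,144 bytes for the baseline, a 24-fold reduction per stored vector.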

Benchmark Performance

Mistral tested Codestral Embed on benchmarks including SWE-Bench and Text2Code from GitHub. In both cases, the company reports that the model outperformed leading embedding models.

Potential Use Cases

Mistral envisions Codestral Embed excelling in high-performance code retrieval and semantic understanding. The model caters to several key use cases:

- RAG: Facilitates faster information retrieval for tasks and agentic processes.

- Semantic Code Search: Developers can find code snippets using natural language queries, streamlining workflows on platforms like documentation systems and coding copilots.

- Similarity Search: Helps identify duplicated or similar code segments, aiding enterprises in enforcing reuse policies.

- Code Analytics: Supports semantic clustering by grouping code based on functionality or structure, enabling deeper insights into code architecture.
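Semantic code search and similarity search both reduce to comparing embedding vectors. The sketch below shows the idea with cosine similarity over a toy in-memory index. The three-dimensional vectors, snippet names, and the 0.95 duplicate threshold are all made up for illustration; real embeddings would come from an embedding model and have far more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def search(query_vec: list[float], index: dict[str, list[float]], top_k: int = 2):
    """Semantic search: rank indexed snippets by similarity to the query vector."""
    scored = [(cosine_similarity(query_vec, vec), name) for name, vec in index.items()]
    return sorted(scored, reverse=True)[:top_k]


def near_duplicates(index: dict[str, list[float]], threshold: float = 0.95):
    """Similarity search: list snippet pairs whose vectors are nearly parallel."""
    names = sorted(index)
    pairs = []
    for i, first in enumerate(names):
        for second in names[i + 1:]:
            if cosine_similarity(index[first], index[second]) >= threshold:
                pairs.append((first, second))
    return pairs


# Hypothetical embeddings for three code snippets (toy values, not model output).
TOY_INDEX = {
    "parse_json": [0.9, 0.1, 0.0],
    "load_json_file": [0.88, 0.15, 0.02],
    "send_email": [0.0, 0.2, 0.95],
}

# Hypothetical embedding of a natural-language query such as "read a JSON document".
query = [1.0, 0.0, 0.0]
print([name for _, name in search(query, TOY_INDEX)])
print(near_duplicates(TOY_INDEX))
```

In this toy index the two JSON helpers rank highest for the query and are also flagged as a near-duplicate pair, while `send_email` is not. At production scale, a vector database or approximate nearest-neighbor index would typically replace the linear scan.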

Market Dynamics and Competition

Mistral’s entry into the embedding space comes amid growing competition. The company has been actively expanding its offerings, launching Mistral Medium 3, a medium-sized version of its flagship large language model (LLM), and introducing the Agents API for building task-oriented agents.

Some observers point out that Mistral’s timing coincides with heightened competition in the embedding sector. While Codestral Embed competes with closed-source models from giants like OpenAI and Cohere, it also faces stiff competition from open-source alternatives like Qodo-Embed-1-1.5B.

VentureBeat reached out to Mistral for further details on Codestral Embed’s licensing options.

A Promising Future

With its focus on code-specific optimization and competitive pricing, Codestral Embed positions itself as a strong contender in the embedding landscape. As developers continue to seek innovative solutions for code-related challenges, Mistral’s latest offering could carve out a niche that propels it forward in this rapidly evolving field.

Related article

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

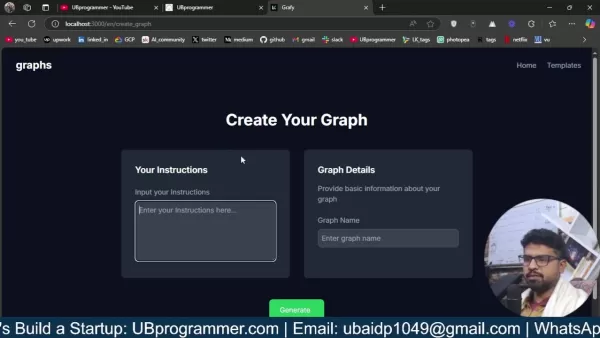

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

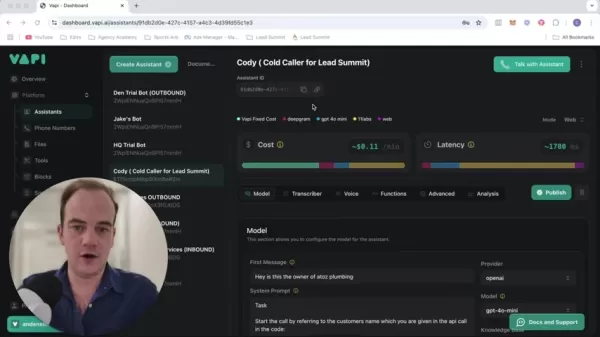

Transform Your Sales Strategy: AI Cold Calling Technology Powered by Vapi

Modern businesses operate at lightning speed, demanding innovative solutions to stay competitive. Picture revolutionizing your agency's outreach with an AI-powered cold calling system that simultaneously engages dozens of prospects - all running auto

Comments (3)

0/200

Transform Your Sales Strategy: AI Cold Calling Technology Powered by Vapi

Modern businesses operate at lightning speed, demanding innovative solutions to stay competitive. Picture revolutionizing your agency's outreach with an AI-powered cold calling system that simultaneously engages dozens of prospects - all running auto

Comments (3)

0/200
