AI Agents Exploit Confidential Data: How Hackers Use Them and Protective Measures

Just like the rest of us, cybercriminals are turning to artificial intelligence to streamline their operations, making their attacks faster, easier, and more cunning. By integrating AI into their usual bag of tricks like automated bots, account takeovers, and social engineering, these savvy scammers are upping their game. A recent report from Gartner sheds light on how this trend is evolving and warns that it could intensify in the near future.

Gartner VP Analyst Jeremy D'Hoinne points out that weak authentication is the Achilles' heel fueling account takeovers. Cyber attackers exploit this vulnerability using a variety of methods to snag account passwords, from data breaches to social engineering.

The Role of AI in Account Takeovers

Once a password is compromised, AI takes the stage. Cybercriminals deploy automated AI bots to bombard multiple services with login attempts, aiming to discover if those credentials are recycled across platforms. The ultimate goal? To find a lucrative site where they can orchestrate a full account takeover. If the hacker isn't interested in pulling off the attack themselves, they can easily offload the stolen information on the dark web, where eager buyers await.

"Account takeover (ATO) remains a persistent attack vector because weak authentication credentials, such as passwords, are gathered by a variety of means including data breaches, phishing, social engineering, and malware," D'Hoinne explains in the Gartner report. "Attackers then leverage bots to automate a barrage of login attempts across a variety of services in the hope that the credentials have been reused on multiple platforms."
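On the defensive side, the pattern D'Hoinne describes (one source trying many different accounts in quick succession) is something a login service can watch for. The following is a minimal sketch, not a method taken from the Gartner report: the `StuffingDetector` class, its thresholds, and the example IP address are all hypothetical, and it assumes failed-login events arrive in time order.

```python
from collections import defaultdict, deque


class StuffingDetector:
    """Flags a source that fails logins for many distinct accounts in a short window."""

    def __init__(self, window_seconds=60.0, max_distinct_accounts=5):
        # Both thresholds are illustrative assumptions, not recommended values.
        self.window_seconds = window_seconds
        self.max_distinct_accounts = max_distinct_accounts
        self._failures = defaultdict(deque)  # source -> deque of (timestamp, username)

    def record_failure(self, source, username, timestamp):
        """Record a failed login; return True if the source now looks suspicious."""
        events = self._failures[source]
        events.append((timestamp, username))
        # Drop events that have fallen out of the sliding window.
        while events and timestamp - events[0][0] > self.window_seconds:
            events.popleft()
        distinct_accounts = {name for _, name in events}
        return len(distinct_accounts) > self.max_distinct_accounts


# One source failing against five different accounts within a few seconds.
detector = StuffingDetector(window_seconds=60.0, max_distinct_accounts=3)
flags = [
    detector.record_failure("203.0.113.7", f"user{i}", timestamp=float(i))
    for i in range(5)
]

# A single user mistyping their own password repeatedly is not flagged.
retry_detector = StuffingDetector(window_seconds=60.0, max_distinct_accounts=3)
retry_flags = [
    retry_detector.record_failure("198.51.100.4", "alice", timestamp=float(i))
    for i in range(10)
]
```

Counting distinct accounts rather than raw failures is a deliberate choice in this sketch: it separates a forgetful user from a bot cycling through a leaked credential list. Attackers who spread attempts across many sources would need additional signals.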

With AI now in their toolkit, attackers can automate the process of taking over accounts more efficiently. Gartner predicts that within the next two years, the time required for an account takeover will plummet by 50%.

AI and Deepfake Social Engineering

AI's impact goes beyond account takeovers; it's also revolutionizing deepfake campaigns. Cybercriminals are blending social engineering with deepfake audio and video to deceive their targets. Imagine getting a call from someone who sounds exactly like your boss or a trusted colleague, urging you to transfer funds or spill sensitive information. It's happening, and it's costing companies big time.

While only a handful of high-profile cases have been reported, the financial damage has been significant. Detecting deepfake voices during personal calls remains tricky. Gartner forecasts that by 2028, 40% of social engineering attacks will target both executives and the broader workforce.

"Organizations will have to stay abreast of the market and adapt procedures and workflows in an attempt to better resist attacks leveraging counterfeit reality techniques," advises Manuel Acosta, senior director analyst at Gartner. "Educating employees about the evolving threat landscape by using training specific to social engineering with deepfakes is a key step."

Thwarting AI-Powered Attacks

So, how can individuals and organizations fend off these AI-driven assaults?

"To combat emerging challenges from AI-driven attacks, organizations must leverage AI-powered tools that can provide granular real-time environment visibility and alerting to augment security teams," suggests Nicole Carignan, senior VP for security & AI strategy at Darktrace.

Carignan also recommends getting ahead of new threats by integrating machine-driven responses, either autonomously or with human oversight, to speed up security team reactions. "Through this approach, the adoption of AI technologies -- such as solutions with anomaly-based detection capabilities that can detect and respond to never-before-seen threats -- can be instrumental in keeping organizations secure."

Multi-factor authentication and biometric verification, like facial or fingerprint scans, are also crucial in safeguarding against account compromise. "Cybercriminals are not only relying on stolen credentials, but also on social manipulation, to breach identity protections," says James Scobey, chief information security officer at Keeper Security. "Deepfakes are a particular concern in this area, as AI models make these attack methods faster, cheaper, and more convincing. As attackers become more sophisticated, the need for stronger, more dynamic identity verification methods – such as multi-factor authentication (MFA) and biometrics – will be vital to defend against these progressively nuanced threats. MFA is essential for preventing account takeovers."
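The article does not say which MFA method to use. One widely deployed second factor is the time-based one-time password (TOTP) defined in RFC 6238, and the sketch below shows roughly how such a code is derived and checked, using only the Python standard library. The function names `totp` and `verify` are illustrative; a real deployment would more likely rely on a vetted library and would also handle clock drift and rate limiting.

```python
import hashlib
import hmac
import struct


def totp(secret: bytes, unix_time: int, step: int = 30, digits: int = 6) -> str:
    """Derive a TOTP code (RFC 6238, HMAC-SHA1) for the given time."""
    counter = unix_time // step                    # number of elapsed time steps
    message = struct.pack(">Q", counter)           # 8-byte big-endian counter
    digest = hmac.new(secret, message, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                     # dynamic truncation (RFC 4226)
    binary = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(binary % 10 ** digits).zfill(digits)


def verify(secret: bytes, submitted: str, unix_time: int,
           step: int = 30, digits: int = 6) -> bool:
    """Check a submitted code against the expected one in constant time."""
    expected = totp(secret, unix_time, step, digits)
    return hmac.compare_digest(expected, submitted)


# Test secret from RFC 6238, Appendix B (never use a published secret in practice).
rfc_secret = b"12345678901234567890"
code_at_59 = totp(rfc_secret, 59, digits=8)
```

Because the code depends on a shared secret and the current time, a password stolen in a breach is not enough on its own to pass this check, which is the property that makes MFA useful against credential reuse.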

Gartner's Tips for Dealing with Social Engineering and Deepfakes

- Educate employees. Train them on social engineering and deepfakes, but don't rely solely on their ability to spot these threats.

- Set up other verification measures. For instance, when someone requests confidential information over the phone, verify the request through a different platform before responding.

- Use a call-back policy. Provide a designated number that employees can call to confirm sensitive or confidential requests.

- Go beyond a call-back policy. Ensure that a single phone call or request can't trigger a high-risk action without additional verification from high-level executives.

- Stay abreast of real-time deepfake detection. Keep up with emerging technologies that can detect deepfakes in audio and video calls, and supplement these with other identification methods like unique IDs.
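The call-back and "go beyond a call-back" tips above can be expressed as a simple rule in a workflow tool: a high-risk action proceeds only after an out-of-band confirmation and sign-off from more than one person. The sketch below is one possible shape for that rule, not Gartner's specification; the `HighRiskRequest` class, its field names, and the default of two approvers are all assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class HighRiskRequest:
    description: str
    requested_by: str
    channel: str                      # where the request arrived, e.g. "phone"
    callback_confirmed: bool = False  # confirmed via the designated call-back number
    approvers: set = field(default_factory=set)

    def confirm_callback(self) -> None:
        """Mark the request as confirmed through the separate call-back channel."""
        self.callback_confirmed = True

    def approve(self, approver: str) -> None:
        """Record an approval; the requester cannot approve their own request."""
        if approver != self.requested_by:
            self.approvers.add(approver)

    def may_execute(self, required_approvals: int = 2) -> bool:
        """Allow the action only with a call-back AND enough independent approvals."""
        return self.callback_confirmed and len(self.approvers) >= required_approvals


request = HighRiskRequest("Wire transfer to new vendor", "caller_claiming_ceo", "phone")
before_checks = request.may_execute()

request.approve("caller_claiming_ceo")  # ignored: self-approval
request.approve("cfo")
request.approve("controller")
approved_without_callback = request.may_execute()

request.confirm_callback()
after_all_checks = request.may_execute()
```

The point of the design is that a convincing voice on a single call cannot satisfy either condition by itself, however realistic the deepfake.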

Want more stories about AI? Sign up for Innovation, our weekly newsletter.

Related article

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

AI Reimagines Michael Jackson in the Metaverse with Stunning Digital Transformations

Artificial intelligence is fundamentally reshaping our understanding of creativity, entertainment, and cultural legacy. This exploration into AI-generated interpretations of Michael Jackson reveals how cutting-edge technology can breathe new life int

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

Does Training Mitigate AI-Induced Cognitive Offloading Effects?

A recent investigative piece on Unite.ai titled 'ChatGPT Might Be Draining Your Brain: Cognitive Debt in the AI Era' shed light on concerning research from MIT. Journalist Alex McFarland detailed compelling evidence of how excessive AI dependency can

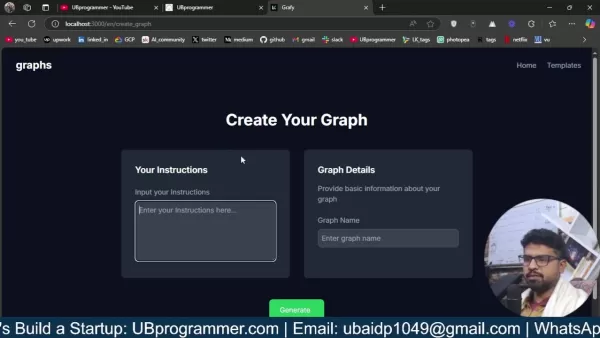

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Comments (25)

0/200

Easily Generate AI-Powered Graphs and Visualizations for Better Data Insights

Modern data analysis demands intuitive visualization of complex information. AI-powered graph generation solutions have emerged as indispensable assets, revolutionizing how professionals transform raw data into compelling visual stories. These intell

Comments (25)

JeffreyThomas

April 23, 2025 at 5:58:22 AM EDT

I was surprised to learn that hackers are using AI to steal data! At least the app offers tips for protecting yourself. Essential reading for anyone online 😱🔒

LarryHernández

April 23, 2025 at 3:19:27 AM EDT

This app really opened my eyes to how hackers use AI to steal data! It's creepy, but also super informative. The section on protective measures was a bit dry, but overall it's a must if you're interested in cybersecurity. Definitely worth a look! 😱🔒

JamesMiller

April 22, 2025 at 2:55:21 AM EDT

This app really showed me how hackers use AI to cause us trouble. It's scary, but very informative. The part on protective measures is a bit basic, though. It could be more detailed, but it's worth a look! 👀

GaryGonzalez

April 21, 2025 at 10:49:10 PM EDT

It's frightening that AI agents can misuse data! It's good to learn about protective measures, but it makes me anxious about online security. This information is useful, but I wish it were a bit more reassuring. 😅

HarryLewis

April 21, 2025 at 9:54:34 AM EDT

It's really shocking that AI is being exploited by hackers! It also explains how to protect yourself, so it's essential for anyone active online. I wish I had known sooner 😓🔐

MiaDavis

April 21, 2025 at 9:17:39 AM EDT

This app opened my eyes to how hackers use AI to steal data! It's scary but really informative. The protective measures part was a bit boring, but overall it's a must-read if you're interested in cybersecurity. Be sure to check it out! 😱🔒
