2024: AI's Year of Remarkable Growth and Innovation

As we step into 2025, it's thrilling to reflect on the incredible strides we made in 2024. From launching the Gemini 2.0 models tailored for the agentic era to empowering creative expression, and from designing novel protein binders to advancing AI-enabled neuroscience and quantum computing, we've been pushing the boundaries of artificial intelligence responsibly and boldly. All of these efforts are aimed at harnessing AI for the greater good of humanity.

As we mentioned in our essay *Why we focus on AI* two years ago, our approach to AI development is rooted in our founding mission to organize the world’s information and make it universally accessible and useful. This mission drives our commitment to enhance the lives of as many people as possible, a goal that remains our north star.

In our 2024 Year-in-Review, we celebrate the remarkable achievements of the many talented teams at Google who have worked tirelessly to advance our mission. Their efforts have set the stage for even more exciting developments in the year ahead.

Relentless Innovation in Models, Products, and Technologies

2024 was all about experimentation, rapid deployment, and getting our latest technologies into the hands of developers. In December, we unveiled the first models of our Gemini 2.0 experimental series, designed specifically for the agentic era. We kicked things off with Gemini 2.0 Flash, our versatile workhorse, followed by cutting-edge prototypes from our agentic research. These include an updated Project Astra, exploring the potential of a universal AI assistant; Project Mariner, an early prototype capable of performing actions in Chrome as an experimental extension; and Jules, an AI-powered code agent. We're eager to integrate Gemini 2.0's capabilities into our flagship products, and we've already begun testing in AI Overviews within Search, used by over a billion people to explore new types of questions.

We also rolled out Deep Research, a new agentic feature in Gemini Advanced that saves hours of research by creating and executing multi-step plans for answering complex questions. Additionally, we introduced Gemini 2.0 Flash Thinking Experimental, a model that transparently displays its thought process.

Earlier in the year, we made significant strides by integrating Gemini's capabilities into more Google products and launching Gemini 1.5 Pro and Gemini 1.5 Flash. The latter, optimized for speed and efficiency, became our most popular model among developers thanks to its compact size and cost-effectiveness.

We also enhanced AI Studio, which is now available as a progressive web app (PWA) installable on desktop, iOS, and Android, giving developers a robust set of resources. The public's response to new features in NotebookLM, such as Audio Overviews, has been fantastic. These features can generate deep-dive discussions from uploaded source material, making learning more engaging.

Speech input and output continue to be refined in products like Gemini Live, Project Astra, Journey Voices, and YouTube's auto dubbing, enhancing user interaction.

In line with our tradition of contributing to the open community, we released two new models from Gemma, our state-of-the-art open model, built on the same research and technology as Gemini. Gemma outperformed similarly sized models in areas like question answering, reasoning, and coding. We also released Gemma Scope, a tool to help researchers understand Gemma 2's inner workings.

We made strides in improving the factuality of our models and reducing hallucinations. In December, we published FACTS Grounding, a benchmark developed jointly by Google DeepMind, Google Research, and Kaggle, to evaluate how well large language models ground their responses in provided source material.

The FACTS Grounding dataset, with 1,719 examples, is designed to test long-form responses grounded in context documents.

We tested leading LLMs using FACTS Grounding, and we're proud to report that Gemini 2.0 Flash Experimental, Gemini 1.5 Flash, and Gemini 1.5 Pro achieved the top three factuality scores, with Gemini-2.0-flash-exp scoring an impressive 83.6%.

We also improved ML efficiency through innovative techniques like blockwise parallel decoding, confidence-based deferral, and speculative decoding, which speed up the inference times of LLMs. These improvements benefit Google products and set industry standards.
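To make the idea behind speculative decoding more concrete, here is a minimal sketch of its greedy variant: a cheap draft model proposes a few tokens, and the larger target model keeps the longest prefix it agrees with. The functions `toy_target` and `toy_draft` are hypothetical stand-ins for real models, and nothing here reflects how the technique is implemented in Google products.

```python
from typing import Callable, List

Token = int
NextToken = Callable[[List[Token]], Token]  # greedy next-token function


def greedy_decode(model: NextToken, prompt: List[Token], max_new: int) -> List[Token]:
    """Baseline: call the model once per generated token."""
    out = list(prompt)
    for _ in range(max_new):
        out.append(model(out))
    return out


def speculative_decode(target: NextToken, draft: NextToken,
                       prompt: List[Token], max_new: int, k: int = 4) -> List[Token]:
    """Greedy speculative decoding; the output matches greedy_decode(target, ...)."""
    out = list(prompt)
    goal = len(prompt) + max_new
    while len(out) < goal:
        # 1. The draft model cheaply proposes up to k tokens.
        proposal: List[Token] = []
        for _ in range(min(k, goal - len(out))):
            proposal.append(draft(out + proposal))
        # 2. The target model checks every proposed position. A real system
        #    does this in one batched forward pass; this sketch uses a loop.
        for i, tok in enumerate(proposal):
            expected = target(out + proposal[:i])
            if expected != tok:
                # 3. First disagreement: keep the agreed prefix plus the
                #    target's own token, and drop the rest of the proposal.
                out.extend(proposal[:i])
                out.append(expected)
                break
        else:
            # Every proposed token was accepted.
            out.extend(proposal)
    return out


# Hypothetical toy models over digit tokens (assumptions for illustration only).
def toy_target(ctx: List[Token]) -> Token:
    return (ctx[-1] * 2 + 1) % 10


def toy_draft(ctx: List[Token]) -> Token:
    # Agrees with the target except after token 7, where it guesses wrong.
    return 0 if ctx[-1] == 7 else toy_target(ctx)


if __name__ == "__main__":
    print(speculative_decode(toy_target, toy_draft, [1], max_new=6))
```

The speed-up in practice comes from step 2: when the draft is usually right, the expensive model verifies several tokens per pass instead of producing one token per pass, while the final text stays the same as ordinary greedy decoding.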

In sports, we launched TacticAI, an AI system for football tactics that provides tactical insights, particularly on corner kicks.

Our commitment to research leadership remains strong. A 2010-2023 WIPO survey on Generative AI citations showed that Google, including Google Research and Google DeepMind, received more than double the citations of the second-most cited institution.

This WIPO graph, based on January 2024 data from The Lens, highlights Alphabet's significant contributions to generative AI research over the past decade.

Finally, we made progress with Project Starline, our "magic window" technology, partnering with HP to commercialize it, aiming to integrate it into video conferencing services like Google Meet and Zoom.

Empowering Creative Vision with Generative AI

We believe AI can unlock new realms of creativity, making creative expression more accessible and helping people realize their artistic visions. In 2024, we introduced a series of updates to our generative media tools, covering images, music, and video.

At the start of the year, we launched ImageFX and MusicFX, generative AI tools that create images and up-to-70-second audio clips from text prompts. At I/O, we previewed MusicFX DJ, designed to make live music creation more accessible. In October, we worked with Jacob Collier to simplify MusicFX DJ for new and aspiring musicians. We also updated our music AI toolkit, Music AI Sandbox, and evolved our Dream Track experiment, allowing U.S. creators to generate instrumental soundtracks across various genres using text-to-music models.

Later in the year, we released Veo 2 and Imagen 3, our latest video and image models. Imagen 3, our highest quality text-to-image model, generates images with superior detail, lighting, and fewer artifacts. Veo 2 demonstrated a better understanding of real-world physics and human movement, enhancing realism.

Veo 2 marks a significant advancement in high-quality video generation.

We continued to explore AI's potential in editing, using it to control attributes like transparency and roughness of objects.

These examples showcase AI's ability to edit material properties using synthetic data generation.

In audio generation, we improved video-to-audio (V2A) technology, generating dynamic soundscapes from text prompts based on on-screen action, which can be paired with AI-generated video from Veo.

Games offer a perfect playground for creative exploration and training embodied agents. In 2024, we introduced Genie 2, a foundation world model that generates diverse, playable 3D environments for training and evaluating embodied agents. This followed the launch of SIMA, which can follow natural-language instructions in various video game settings.

The Architecture of Intelligence: Advances in Robotics, Hardware, and Computing

As our multimodal models become more adept at understanding the world's physics, they're enabling exciting advances in robotics. We're getting closer to our goal of more capable and helpful robots.

With ALOHA Unleashed, our robot mastered tasks like tying shoelaces, hanging shirts, repairing other robots, inserting gears, and cleaning kitchens.

At the year's start, we introduced AutoRT, SARA-RT, and RT-Trajectory, extensions of our Robotics Transformers work to help robots better navigate their environments and make quicker decisions. We also released ALOHA Unleashed, teaching robots to coordinate two arms, and DemoStart, which uses reinforcement learning to improve real-world performance on a multi-fingered robotic hand using simulations.

Robotic Transformer 2 (RT-2) learns from both web and robotics data, enabling it to perform tasks like placing a strawberry in a bowl.

Beyond robotics, our AlphaChip reinforcement learning method is revolutionizing chip floorplanning for data centers and smartphones. We released a pre-trained checkpoint to facilitate external adoption of AlphaChip's open-source release. We also made Trillium, our sixth-generation TPU, available to Google Cloud customers, showcasing how AI can enhance chip design.

AlphaChip learns to optimize chip layouts, improving with each design it creates.

Our research also tackled error correction in quantum computers. In November, we launched AlphaQubit, an AI-based decoder that identifies quantum computing errors with high accuracy. This collaboration between Google DeepMind and Google Research accelerated progress toward reliable quantum computers. In tests, AlphaQubit reduced errors by 6% compared to tensor network methods and by 30% compared to correlated matching.

In December, the Google Quantum AI team unveiled Willow, our latest quantum chip. Willow can perform a benchmark computation in under five minutes that would take today's fastest supercomputers 10 septillion years. Using quantum error correction, Willow cut the error rate in half each time the array of encoded qubits was scaled up, achieving a milestone known as "below threshold" and earning the Physics Breakthrough of the Year award.

Willow showcases state-of-the-art performance in quantum computing.

Uncovering New Solutions: Progress in Science, Biology, and Mathematics

We continued to accelerate scientific progress with AI, releasing tools and papers that demonstrate AI's power in advancing science and mathematics. Here are some highlights:

In January, we introduced AlphaGeometry, an AI system for solving complex geometry problems. Our updated AlphaGeometry 2 and AlphaProof, a reinforcement-learning-based system for formal math reasoning, achieved silver medalist performance at the July 2024 International Mathematical Olympiad.

AlphaGeometry 2 solved Problem 4 of the July 2024 International Mathematical Olympiad in just 19 seconds, proving that ∠KIL + ∠XPY equals 180°.

In collaboration with Isomorphic Labs, we introduced AlphaFold 3, which predicts the structure and interactions of life's molecules, aiming to transform our understanding of biology and drug discovery.

AlphaFold 3's advanced architecture and training cover all life's molecules, from proteins to DNA.

We also made significant strides in protein design with AlphaProteo, an AI system for creating high-strength protein binders, which could lead to new drugs and biosensors.

AlphaProteo can design new protein binders for various target proteins.

In collaboration with Harvard's Lichtman Lab, we produced a nano-scale mapping of a piece of the human brain, a first of its kind, and made it available for researchers. This follows our decade-long effort in connectomics, now extending to human brain mapping.

This brain mapping project reveals mirror-image cell clusters in the deepest layer of the cortex.

In late November, we co-hosted the AI for Science Forum with the Royal Society, discussing key topics like protein structure prediction, human brain mapping, and using AI for forecasting and wildfire detection. We also hosted a Q&A with four Nobel Laureates at the forum, available on the Google DeepMind podcast.

2024 was also a landmark year for recognition of AI in science. Demis Hassabis and John Jumper shared the Nobel Prize in Chemistry for their work on protein structure prediction with AlphaFold 2, alongside David Baker, who was recognized for computational protein design. Geoffrey Hinton, along with John Hopfield, received the Nobel Prize in Physics for foundational work in machine learning with artificial neural networks.

Google also received additional accolades, including the NeurIPS 2024 Test of Time Paper Awards and the Beale-Orchard-Hays Prize for Primal-Dual Linear Programming (PDLP), which is now part of Google OR-Tools and helps solve large-scale linear programming problems with real-world applications.

AI for the Benefit of Humanity

This year, we made significant product advances and published research demonstrating how AI can directly and immediately benefit people in areas like healthcare, disaster readiness, and education.

In healthcare, AI promises to democratize quality care, particularly in early detection of cardiovascular disease. Our research showed that a simple fingertip device, combined with basic metadata, can predict heart health risks. We also advanced AI-enabled diagnostics for tuberculosis, showing how AI can screen populations with high TB and HIV rates effectively.

Med-Gemini achieved a new state-of-the-art score on the MedQA benchmark, surpassing our previous best, Med-PaLM 2, by 4.6 percentage points.

Our Gemini model is a versatile tool for professionals, and we're developing fine-tuned models for specific domains. Med-Gemini, for instance, combines training on de-identified medical data with Gemini's capabilities, achieving 91.1% accuracy on the MedQA USMLE-style question benchmark.

We're also exploring how machine learning can address shortages in imaging expertise in fields like radiology, dermatology, and pathology. We released Derm Foundation and Path Foundation for diagnostic tasks and biomarker discovery, collaborated with Stanford Medicine on the Skin Condition Image Network (SCIN) dataset, and unveiled CT Foundation for medical imaging research.

In education, we introduced LearnLM, a family of models fine-tuned for learning, enhancing experiences in Search, YouTube, and Gemini. LearnLM outperformed other leading AI models, and we made it available to developers in AI Studio. Our conversational learning companion, LearnAbout, and the audio discussion tool, Illuminate, further enrich learning experiences.

In disaster forecasting and preparedness, we introduced GenCast, improving weather and extreme event forecasting, and NeuralGCM, capable of simulating thousands of days of atmospheric conditions. GraphCast, which won the 2024 MacRobert Award, provides detailed weather predictions.

GraphCast's predictions over 10 days showcase specific humidity, surface temperature, and wind speed.

We improved our flood forecasting model to predict flooding seven days in advance, expanding coverage to 100 countries and 700 million people.

Our flood forecasting model now covers over 100 countries, with virtual gauges in 150 countries where physical gauges are unavailable.

AI also aids in wildfire detection and mitigation. Our Wildfire Boundary Maps are now available in 22 countries, and we created FireSat, a satellite constellation that can detect small wildfires within 20 minutes.

We expanded Google Translate to include 110 new languages, helping break down barriers to information and opportunity for over 614 million speakers.

These new languages in Google Translate represent 8% of the world's population.

Helping Set the Standard in Responsible AI

We continued our industry-leading research in AI safety, developing new tools and techniques and integrating these into our latest models. We're committed to collaborating to address risks.

Our research into misuse found that deepfakes and jailbreaks are the most common issues. In May, we introduced the Frontier Safety Framework to identify emerging capabilities in our advanced AI models and launched our AI Responsibility Lifecycle framework. In October, we expanded our Responsible GenAI Toolkit to work with any LLM, helping developers build AI responsibly.

We released a paper on The Ethics of Advanced AI Assistants, examining the technical and moral landscape of AI assistants and the opportunities and risks they pose.

We expanded SynthID's capabilities to watermark AI-generated text in the Gemini app and web experience and video in Veo. To enhance online transparency, we joined the Coalition for Content Provenance and Authenticity (C2PA) and worked on a new, more secure version of the Content Credentials standard.

SynthID embeds a watermark by adjusting the probability scores of predicted tokens, without compromising the quality, accuracy, or creativity of AI-generated content.
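As a rough illustration of what "adjusting the probability scores of predicted tokens" can look like, the sketch below implements a much simpler, generic scheme: a keyed pseudorandom function marks part of the vocabulary as "green" for each context, and green tokens get a small logit boost. This is not SynthID's actual algorithm. The vocabulary, the key, the `delta` value, and all function names are assumptions made up for the example.

```python
import hashlib
import math
from typing import Dict, List

VOCAB = ["the", "a", "cat", "dog", "sat", "ran", "on", "mat"]  # toy vocabulary


def is_green(prev_token: str, token: str, key: str) -> bool:
    """Keyed pseudorandom split of the vocabulary, reseeded by the previous token."""
    digest = hashlib.sha256(f"{key}|{prev_token}|{token}".encode()).digest()
    return digest[0] % 2 == 0


def watermarked_probs(logits: Dict[str, float], prev_token: str,
                      key: str, delta: float = 2.0) -> Dict[str, float]:
    """Add `delta` to the logits of green tokens, then apply a softmax."""
    biased = {tok: logit + (delta if is_green(prev_token, tok, key) else 0.0)
              for tok, logit in logits.items()}
    top = max(biased.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(logit - top) for tok, logit in biased.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}


def green_fraction(tokens: List[str], key: str) -> float:
    """Detector statistic: share of tokens that are green given their predecessor."""
    pairs = list(zip(tokens, tokens[1:]))
    return sum(is_green(prev, tok, key) for prev, tok in pairs) / len(pairs)


if __name__ == "__main__":
    uniform_logits = {tok: 0.0 for tok in VOCAB}
    probs = watermarked_probs(uniform_logits, prev_token="the", key="secret")
    for tok, p in sorted(probs.items(), key=lambda item: -item[1]):
        print(f"{tok:>4}  {p:.3f}")
```

Text sampled from the biased distribution tends to contain more green tokens than unwatermarked text, so anyone holding the key can test for the watermark by checking whether `green_fraction` is unusually high. A small `delta` keeps the shift in probabilities, and therefore the effect on quality, modest.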

Beyond LLMs, we shared our biosecurity approach for AlphaFold 3, worked with industry partners to launch the Coalition for Secure AI (CoSAI), and participated in the AI Seoul Summit to contribute to international AI governance.

As we develop new technologies like AI agents, we'll continue to explore safety, security, and privacy questions. Guided by our AI Principles, we're taking a deliberate, gradual approach, conducting extensive research, safety training, and risk assessments with trusted testers and external experts.

Looking Ahead to 2025

2024 was a year of incredible progress and excitement in AI. We're even more thrilled about what's coming in 2025.

As we continue to push the boundaries of AI research in products, science, health, and creativity, we must thoughtfully consider how and when to deploy these technologies. By prioritizing responsible AI practices and fostering collaboration, we'll continue to play a crucial role in building a future where AI benefits humanity.

Related article

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

"Dot AI Companion App Announces Closure, Discontinues Personalized Service"

Dot, an AI companion application designed to function as a personal friend and confidant, will cease operations, according to a Friday announcement from its developers. New Computer, the startup behind Dot, stated on its website that the service will

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

Anthropic Resolves Legal Case Over AI-Generated Book Piracy

Anthropic has reached a resolution in a significant copyright dispute with US authors, agreeing to a proposed class action settlement that avoids a potentially costly trial. The agreement, filed in court documents this Tuesday, stems from allegations

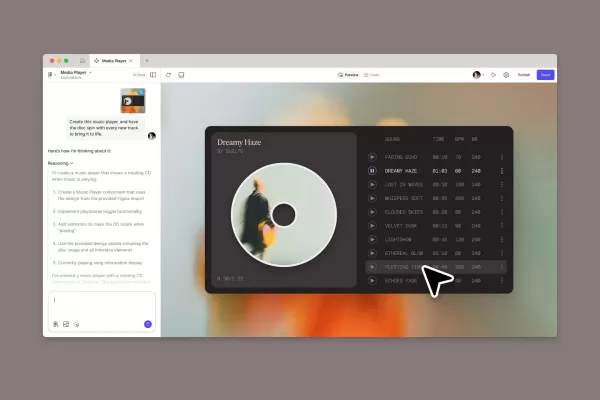

Figma Releases AI-Powered App Builder Tool to All Users

Figma Make, the innovative prompt-to-app development platform unveiled earlier this year, has officially exited beta and rolled out to all users. This groundbreaking tool joins the ranks of AI-powered coding assistants like Google's Gemini Code Assis

Comments (26)

AlbertSanchez

August 23, 2025 at 1:01:15 AM EDT

Wow, 2024 was a wild ride for AI! Gemini 2.0 sounds like a game-changer for agents. Curious how it stacks up against other models—anybody tested it yet? 🧠

FrankSmith

April 22, 2025 at 9:42:56 PM EDT

2024 was an incredible year for AI! From Gemini 2.0 to quantum computing, it's impressive. It feels like we're living in a science fiction movie. I can't wait to see what 2025 brings! 🚀

RalphGarcia

April 19, 2025 at 1:10:39 PM EDT

The progress in AI in 2024 was truly astonishing! From Gemini 2.0 to quantum computing, it's just like a sci-fi movie. I'm looking forward to 2025. 🚀

CarlLewis

April 18, 2025 at 7:58:13 AM EDT

2024 was insane with AI! From Gemini 2.0 to quantum computing, it felt like living in a sci-fi movie. The creativity boost was real, but sometimes I felt overwhelmed by the rapid changes. Still, it's exciting to see where AI will take us next! 🚀

RogerRoberts

April 14, 2025 at 6:22:16 PM EDT

2024 was crazy for AI! From Gemini 2.0 to quantum computing, it felt like living in a science fiction movie. The creativity boost was real, but at times I felt overwhelmed by the rapid changes. Even so, it's exciting to see where AI will take us next! 🚀

RobertMartin

April 13, 2025 at 6:55:17 AM EDT

The progress in AI in 2024 was truly astonishing! From Gemini 2.0 to quantum computing, it felt like being in a sci-fi movie. The boost to creativity was real, but I was sometimes overwhelmed by the rapid changes. Still, I'm looking forward to seeing where AI leads us next! 🚀

As we step into 2025, it's thrilling to reflect on the incredible strides we made in 2024. From launching the Gemini 2.0 models tailored for the agentic era to empowering creative expression, and from designing novel protein binders to advancing AI-enabled neuroscience and quantum computing, we've been pushing the boundaries of artificial intelligence responsibly and boldly. All of these efforts are aimed at harnessing AI for the greater good of humanity.

As we mentioned in our essay *Why we focus on AI* two years ago, our approach to AI development is rooted in our founding mission to organize the world’s information and make it universally accessible and useful. This mission drives our commitment to enhance the lives of as many people as possible, a goal that remains our north star.

In our 2024 Year-in-Review, we celebrate the remarkable achievements of the many talented teams at Google who have worked tirelessly to advance our mission. Their efforts have set the stage for even more exciting developments in the year ahead.

Relentless Innovation in Models, Products, and Technologies

2024 was all about experimentation, rapid deployment, and getting our latest technologies into the hands of developers. In December, we unveiled the first models of our Gemini 2.0 experimental series, designed specifically for the agentic era. We kicked things off with Gemini 2.0 Flash, our versatile workhorse, followed by cutting-edge prototypes from our agentic research. These include an updated Project Astra, exploring the potential of a universal AI assistant; Project Mariner, an early prototype capable of performing actions in Chrome as an experimental extension; and Jules, an AI-powered code agent. We're eager to integrate Gemini 2.0's capabilities into our flagship products, and we've already begun testing in AI Overviews within Search, used by over a billion people to explore new types of questions.

Earlier in the year, we made significant strides by integrating Gemini's capabilities into more Google products and launching Gemini 1.5 Pro and Gemini 1.5 Flash. The latter, optimized for speed and efficiency, became our most popular model among developers thanks to its compact size and cost-effectiveness.

We also enhanced AI Studio, making it available as a progressive web app (PWA) installable on desktop, iOS, and Android, providing developers with a robust set of resources. The public's response to new features in NotebookLM, such as Audio Overviews, has been fantastic. These features can generate deep dive discussions from uploaded source material, making learning more engaging.

Speech input and output continue to be refined in products like Gemini Live, Project Astra, Journey Voices, and YouTube's auto dubbing, enhancing user interaction.

In line with our tradition of contributing to the open community, we released two new models from Gemma, our state-of-the-art open model, built on the same research and technology as Gemini. Gemma outperformed similarly sized models in areas like question answering, reasoning, and coding. We also released Gemma Scope, a tool to help researchers understand Gemma 2's inner workings.

We made strides in improving the factuality of our models and reducing hallucinations. In December, we published FACTS Grounding, a benchmark developed in collaboration with Google DeepMind, Google Research, and Kaggle, to evaluate how well large language models ground their responses in provided source material and avoid hallucinations.

We also improved ML efficiency through innovative techniques like blockwise parallel decoding, confidence-based deferral, and speculative decoding, which speed up the inference times of LLMs. These improvements benefit Google products and set industry standards.

In sports, we launched TacticAI, an AI system for football tactics that provides tactical insights, particularly on corner kicks.

Our commitment to research leadership remains strong. A 2010-2023 WIPO survey on Generative AI citations showed that Google, including Google Research and Google DeepMind, received more than double the citations of the second-most cited institution.

Empowering Creative Vision with Generative AI

We believe AI can unlock new realms of creativity, making creative expression more accessible and helping people realize their artistic visions. In 2024, we introduced a series of updates to our generative media tools, covering images, music, and video.

At the start of the year, we launched ImageFX and MusicFX, generative AI tools that create images and up-to-70-second audio clips from text prompts. At I/O, we previewed MusicFX DJ, designed to make live music creation more accessible. In October, we worked with Jacob Collier to simplify MusicFX DJ for new and aspiring musicians. We also updated our music AI toolkit, Music AI Sandbox, and evolved our Dream Track experiment, allowing U.S. creators to generate instrumental soundtracks across various genres using text-to-music models.

We continued to explore AI's potential in editing, using it to control attributes like transparency and roughness of objects.

In audio generation, we improved video-to-audio (V2A) technology, generating dynamic soundscapes from text prompts based on on-screen action, which can be paired with AI-generated video from Veo.

Games offer a perfect playground for creative exploration and training embodied agents. In 2024, we introduced Genie 2, a foundation world model that generates diverse, playable 3D environments for training and evaluating embodied agents. This followed the launch of SIMA, which can follow natural-language instructions in various video game settings.

The Architecture of Intelligence: Advances in Robotics, Hardware, and Computing

As our multimodal models become more adept at understanding the world's physics, they're enabling exciting advances in robotics. We're getting closer to our goal of more capable and helpful robots.

At the year's start, we introduced AutoRT, SARA-RT, and RT-Trajectory, extensions of our Robotics Transformers work to help robots better navigate their environments and make quicker decisions. We also released ALOHA Unleashed, teaching robots to coordinate two arms, and DemoStart, which uses reinforcement learning to improve real-world performance on a multi-fingered robotic hand using simulations.

Our research also tackled error correction in quantum computers. In November, we launched AlphaQubit, an AI-based decoder that identifies quantum computing errors with high accuracy. This collaboration between Google DeepMind and Google Research accelerated progress toward reliable quantum computers. In tests, AlphaQubit reduced errors by 6% compared to tensor network methods and by 30% compared to correlated matching.

In December, the Google Quantum AI team unveiled Willow, our latest quantum chip. Willow can perform a benchmark computation in under five minutes that would take today's fastest supercomputers 10 septillion years. Using quantum error correction, Willow halved the error rate, achieving a milestone known as "below threshold" and earning the Physics Breakthrough of the Year award.

Uncovering New Solutions: Progress in Science, Biology, and Mathematics

We continued to accelerate scientific progress with AI, releasing tools and papers that demonstrate AI's power in advancing science and mathematics. Here are some highlights:

In January, we introduced AlphaGeometry, an AI system for solving complex geometry problems. Our updated AlphaGeometry 2 and AlphaProof, a reinforcement-learning-based system for formal math reasoning, achieved silver medalist performance at the July 2024 International Mathematical Olympiad.

In collaboration with Harvard's Lichtman Lab, we produced a nano-scale mapping of a piece of the human brain, a first of its kind, and made it available for researchers. This follows our decade-long effort in connectomics, now extending to human brain mapping.

In late November, we co-hosted the AI for Science Forum with the Royal Society, discussing key topics like protein structure prediction, human brain mapping, and using AI for forecasting and wildfire detection. We also hosted a Q&A with four Nobel Laureates at the forum, available on the Google DeepMind podcast.

2024 was also a landmark year as Demis Hassabis, John Jumper, and David Baker received the Nobel Prize in Chemistry for their work on AlphaFold 2, recognized for revolutionizing protein design. Geoffrey Hinton, along with John Hopfield, received the Nobel Prize in Physics for foundational work in machine learning with artificial neural networks.

Google also received additional accolades, including the NeurIPS 2024 Test of Time Paper Awards and the Beale—Orchard-Hays Prize for Primal-Dual Linear Programming (PDLP), now part of Google OR Tools, aiding in large-scale linear programming with real-world applications.

AI for the Benefit of Humanity

This year, we made significant product advances and published research demonstrating how AI can directly and immediately benefit people in areas like healthcare, disaster readiness, and education.

In healthcare, AI promises to democratize quality care, particularly in early detection of cardiovascular disease. Our research showed that a simple fingertip device, combined with basic metadata, can predict heart health risks. We also advanced AI-enabled diagnostics for tuberculosis, showing how AI can screen populations with high TB and HIV rates effectively.

We're also exploring how machine learning can address shortages in imaging expertise in fields like radiology, dermatology, and pathology. We released Derm Foundation and Path Foundation for diagnostic tasks and biomarker discovery, collaborated with Stanford Medicine on the Skin Condition Image Network (SCIN) dataset, and unveiled CT Foundation for medical imaging research.

In education, we introduced LearnLM, a family of models fine-tuned for learning, enhancing experiences in Search, YouTube, and Gemini. LearnLM outperformed other leading AI models, and we made it available to developers in AI Studio. Our conversational learning companion, LearnAbout, and the audio discussion tool, Illuminate, further enrich learning experiences.

In disaster forecasting and preparedness, we introduced GenCast, improving weather and extreme event forecasting, and NeuralGCM, capable of simulating thousands of days of atmospheric conditions. GraphCast, which won the 2024 MacRobert Award, provides detailed weather predictions.

We improved our flood forecasting model to predict flooding seven days in advance, expanding coverage to 100 countries and 700 million people.

We expanded Google Translate to include 110 new languages, helping break down barriers to information and opportunity for over 614 million speakers.

Helping Set the Standard in Responsible AI

We continued our industry-leading research in AI safety, developing new tools and techniques and integrating these into our latest models. We're committed to collaborating to address risks.

Our research into misuse found that deepfakes and jailbreaks are the most common issues. In May, we introduced the Frontier Safety Framework to identify emerging capabilities in our advanced AI models and launched our AI Responsibility Lifecycle framework. In October, we expanded our Responsible GenAI Toolkit to work with any LLM, helping developers build AI responsibly.

We released a paper on The Ethics of Advanced AI Assistants, examining the technical and moral landscape of AI assistants and the opportunities and risks they pose.

We expanded SynthID's capabilities to watermark AI-generated text in the Gemini app and web experience, as well as AI-generated video in Veo. To enhance online transparency, we joined the Coalition for Content Provenance and Authenticity (C2PA) and worked on a new, more secure version of the Content Credentials standard.

Beyond LLMs, we shared our biosecurity approach for AlphaFold 3, worked with industry partners to launch the Coalition for Secure AI (CoSAI), and participated in the AI Seoul Summit to contribute to international AI governance.

As we develop new technologies like AI agents, we'll continue to explore safety, security, and privacy questions. Guided by our AI Principles, we're taking a deliberate, gradual approach, conducting extensive research, safety training, and risk assessments with trusted testers and external experts.

Looking Ahead to 2025

2024 was a year of incredible progress and excitement in AI. We're even more thrilled about what's coming in 2025.

As we continue to push the boundaries of AI research in products, science, health, and creativity, we must thoughtfully consider how and when to deploy these technologies. By prioritizing responsible AI practices and fostering collaboration, we'll continue to play a crucial role in building a future where AI benefits humanity.

August 23, 2025 at 1:01:15 AM EDT

Wow, 2024 was a wild ride for AI! Gemini 2.0 sounds like a game-changer for agents. Curious how it stacks up against other models—anybody tested it yet? 🧠

April 22, 2025 at 9:42:56 PM EDT

2024 was an incredible year for AI! From Gemini 2.0 to quantum computing, it's impressive. It feels like we're living in a science fiction movie. I can't wait to see what 2025 brings! 🚀

April 19, 2025 at 1:10:39 PM EDT

The progress in AI in 2024 was truly astonishing! From Gemini 2.0 to quantum computing, it's just like a sci-fi movie. I'm looking forward to 2025. 🚀

April 18, 2025 at 7:58:13 AM EDT

2024 was insane with AI! From Gemini 2.0 to quantum computing, it felt like living in a sci-fi movie. The creativity boost was real, but sometimes I felt overwhelmed by the rapid changes. Still, it's exciting to see where AI will take us next! 🚀

April 14, 2025 at 6:22:16 PM EDT

2024 was crazy with AI! From Gemini 2.0 to quantum computing, it felt like living in a science fiction movie. The creativity boost was real, but at times I felt overwhelmed by the rapid changes. Even so, it's exciting to see where AI will take us next! 🚀

April 13, 2025 at 6:55:17 AM EDT

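The progress in AI in 2024 was truly astonishing! From Gemini 2.0 to quantum computing, it felt like being in a sci-fi movie. There was definitely a boost in creativity, but the rapid changes were sometimes overwhelming. Still, I'm looking forward to seeing where AI leads us next! 🚀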
