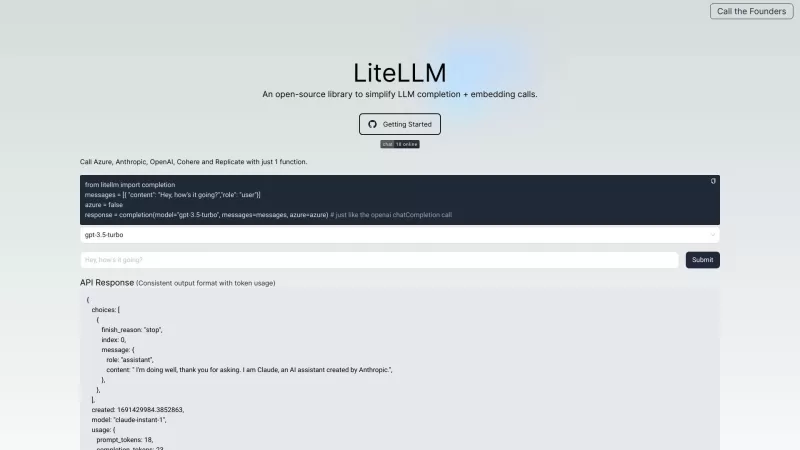

LiteLLM

LiteLLM Simplifies LLM Completion

LiteLLM Product Information

Ever wondered what LiteLLM is all about? LiteLLM is an open-source Python library that simplifies LLM completion and embedding calls. It gives you one user-friendly interface to a range of LLM models, so you don't have to learn each provider's API separately. Whether you're a developer or just curious about AI, it's a handy tool for cutting down the boilerplate around LLM calls.

How to Use LiteLLM?

Ready to dive into LiteLLM? Here's how to get started. First, install the `litellm` package (`pip install litellm`) and import it in your Python code. Next, set your LLM API keys, such as OPENAI_API_KEY and COHERE_API_KEY, as environment variables. Once that's done, you can write a Python function and start making LLM completion calls with LiteLLM. LiteLLM also comes with a demo playground where you can try different LLM models, write some Python code, and see the results right away. It's like a sandbox for your AI experiments!
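The steps above can be sketched roughly like this. It's a minimal sketch, assuming `litellm` is installed and `OPENAI_API_KEY` is already set in your environment. `completion` is LiteLLM's own function; the helpers `missing_keys`, `build_messages`, and `ask`, plus the `completion_fn` parameter, are hypothetical names made up for this example.

```python
import os


def missing_keys(names, env=None):
    """Return the API key names that are not set (or are empty) in the environment."""
    env = os.environ if env is None else env
    return [name for name in names if not env.get(name)]


def build_messages(prompt):
    """Wrap a plain prompt in the chat-style message list that completion() takes."""
    return [{"role": "user", "content": prompt}]


def ask(prompt, model="gpt-3.5-turbo", completion_fn=None):
    """Send one prompt to a model and return the reply text.

    completion_fn is a hypothetical hook (not part of LiteLLM) for swapping in
    a stub during tests; by default litellm.completion is used.
    """
    if completion_fn is None:
        # Imported lazily so this file still loads where litellm is not installed.
        from litellm import completion as completion_fn
    response = completion_fn(model=model, messages=build_messages(prompt))
    return response.choices[0].message.content
```

With a key in place, `print(ask("Hello, how are you?"))` should print the model's reply. Calling `missing_keys(["OPENAI_API_KEY", "COHERE_API_KEY"])` first is a cheap way to catch a forgotten environment variable before any request goes out.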

LiteLLM's Core Features

What makes LiteLLM stand out? For starters, it simplifies LLM completion and embedding calls, so there's no more wrestling with a different API for each provider. It supports a range of LLM models, from the popular gpt-3.5-turbo to Cohere's command-nightly. And the demo playground is a handy way to compare different LLM models side by side. Whether you're a seasoned pro or just starting out, LiteLLM's got you covered.
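Side-by-side comparison is also easy to do in plain code. Here's a rough sketch, assuming `litellm` is installed and the keys for every provider you name are set (for the two models mentioned above, that means `OPENAI_API_KEY` and `COHERE_API_KEY`). The function `compare_models` and its `completion_fn` parameter are hypothetical, not part of LiteLLM.

```python
def compare_models(prompt, models, completion_fn=None):
    """Run the same prompt against several models and collect each reply.

    completion_fn is a hypothetical hook for testing; by default the real
    litellm.completion function is used.
    """
    if completion_fn is None:
        # Lazy import keeps the helper importable without litellm installed.
        from litellm import completion as completion_fn
    messages = [{"role": "user", "content": prompt}]
    replies = {}
    for model in models:
        # The call shape is the same for every model; only the name changes.
        response = completion_fn(model=model, messages=messages)
        replies[model] = response.choices[0].message.content
    return replies
```

For example, `compare_models("Explain embeddings in one sentence.", ["gpt-3.5-turbo", "command-nightly"])` returns a dict keyed by model name, which you can print or diff however you like.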

LiteLLM's Use Cases

Wondering where you can use LiteLLM? It fits most tasks that call an LLM, including text generation, language understanding, and building chatbots. It works for both research projects and applications that need LLM features. So, whether you're working on the next big AI breakthrough or just want to add some smarts to your app, LiteLLM is a good fit.
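To make the chatbot use case a bit more concrete, here's a small sketch of a multi-turn conversation that keeps its own history. It assumes `litellm` is installed and the matching API key is set. The `ChatSession` class and its `completion_fn` parameter are hypothetical and only here for illustration.

```python
class ChatSession:
    """Keep a running message history and send it to the model on every turn."""

    def __init__(self, model="gpt-3.5-turbo", completion_fn=None):
        if completion_fn is None:
            # Lazy import so the class can be defined without litellm installed.
            from litellm import completion as completion_fn
        self.model = model
        self.messages = []
        self._completion = completion_fn  # hypothetical hook, useful for tests

    def send(self, user_text):
        """Add the user's message, call the model, and record the reply."""
        self.messages.append({"role": "user", "content": user_text})
        response = self._completion(model=self.model, messages=self.messages)
        reply = response.choices[0].message.content
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

Each call to `send` passes the whole history, so the model sees earlier turns. A real chatbot would probably also trim old messages to stay within the model's context limit, which this sketch leaves out.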

FAQ from LiteLLM

- What LLM models does LiteLLM support?

- LiteLLM supports a variety of models including GPT-3.5-turbo and Cohere's command-nightly, among others.

- Can LiteLLM be used for research purposes?

- Absolutely! LiteLLM is perfect for research, allowing you to experiment with different LLM models and see what works best for your project.

- Does LiteLLM have its own pricing?

- No, LiteLLM itself is free to use. However, you'll need to consider the pricing of the LLM APIs you're using, like OpenAI or Cohere.

- What is the demo playground in LiteLLM?

- The demo playground is a feature where you can write Python code to test and compare different LLM models in real-time. It's a great way to see how different models perform without leaving your development environment.

- LiteLLM Discord

Here is the LiteLLM Discord: https://discord.com/invite/wuPM9dRgDw

- LiteLLM GitHub

LiteLLM GitHub link: https://github.com/BerriAI/litellm
